Estimated reading time: 8 minutes

GitHub Copilot Enterprise and Pro+ users can now hand tasks to a new class of software engineer.

Instead of assigning a junior developer straightforward tasks like documenting code or tidying up technical debt, premium GitHub users can allocate the task to an AI agent that will work asynchronously to solve the problem.

Drawing on the context outlined in an assigned GitHub issue, the new GitHub Copilot agent – which was designed and built under the codename Project Padawan – will spin up a cloud sandbox for each assigned task, clone the repository, set up the environment, and analyze the codebase. It then makes changes and builds, tests, and lints the code, according to a blog post from GitHub. It will also update the pull request with information on its progress as it works.

But just like with any new team member, Microsoft’s public GitHub repositories show that there are still “forming, storming, norming and performing” stages with this new type of team dynamic.

The Microsoft .NET team, which oversees the open-source .NET framework, introduced the coding agent within .NET repositories on May 19, 2025, and has since seen varying levels of success with the experiment.

GitHub says that the “agent excels at low-to-medium complexity tasks in well-tested codebases.” This is a sentiment echoed by Gaurav Seth, Microsoft’s partner director of product for developer platforms, who told LeadDev via email that the team has “found it to be helpful for handling time-consuming tasks like setting up environments, making changes, testing, and creating pull requests.”

Where GitHub’s coding agent gets an A+

One example of a time-consuming task that GitHub’s coding agent helps with is documenting breaking changes, meaning changes that could cause other parts of a system to fail if they aren’t updated to match. Traditionally, at Microsoft, when a developer makes a breaking change, they must open an issue in the documentation repository, which is then tackled manually, Seth said. For breaking change GitHub issue 46599, the team instead enlisted GitHub’s new coding agent.

“[The agent] identified the three relevant files, updated them based on the issue details, and opened a pull request (PR) with the proposed changes,” Seth said. “The original developer reviewed the PR, and the documentation owner approved and merged it.”

At automated software testing firm Tricentis, around 200 developers use agentic coding tools weekly, enabling them to discover what works and what doesn’t when using AI agents for coding, said David Colwell, the firm’s vice president of AI and machine learning. Issue 46599 is a great example of how asynchronous coding agents can excel when situations are explained in detail, Colwell said. The more context that’s given, the better the results, according to a blog post from Microsoft, which also recommends that prompts should be written as if “briefing a team member.”

Before merging the code for GitHub issue 46599, some “human edits” were made, which were mostly grammatical. Other changes included fixing an incorrect date input by the agent. Nandita Giri, an AI researcher and former Meta developer, said that if the developer had provided the date in the assigned GitHub issue 46599, then Copilot would have included it. “It’s all about instructions,” she added.

“That’s why [issue 46599] is a good use case of agentic independent AI because it was small enough to be easily reviewable, so the mistakes are easy to catch,” Colwell said. “It’s fairly contained, not a lot of decision-making, and you had a very well-documented description of what you want it to do.”

What about when it goes wrong?

While GitHub issue 46599 is an example of a successful use case, Colwell is reluctant to rely on independent coding agents for most tasks. He prefers to use GitHub’s agent mode within the integrated development environment (IDE), where developers can work alongside the agent for more control.

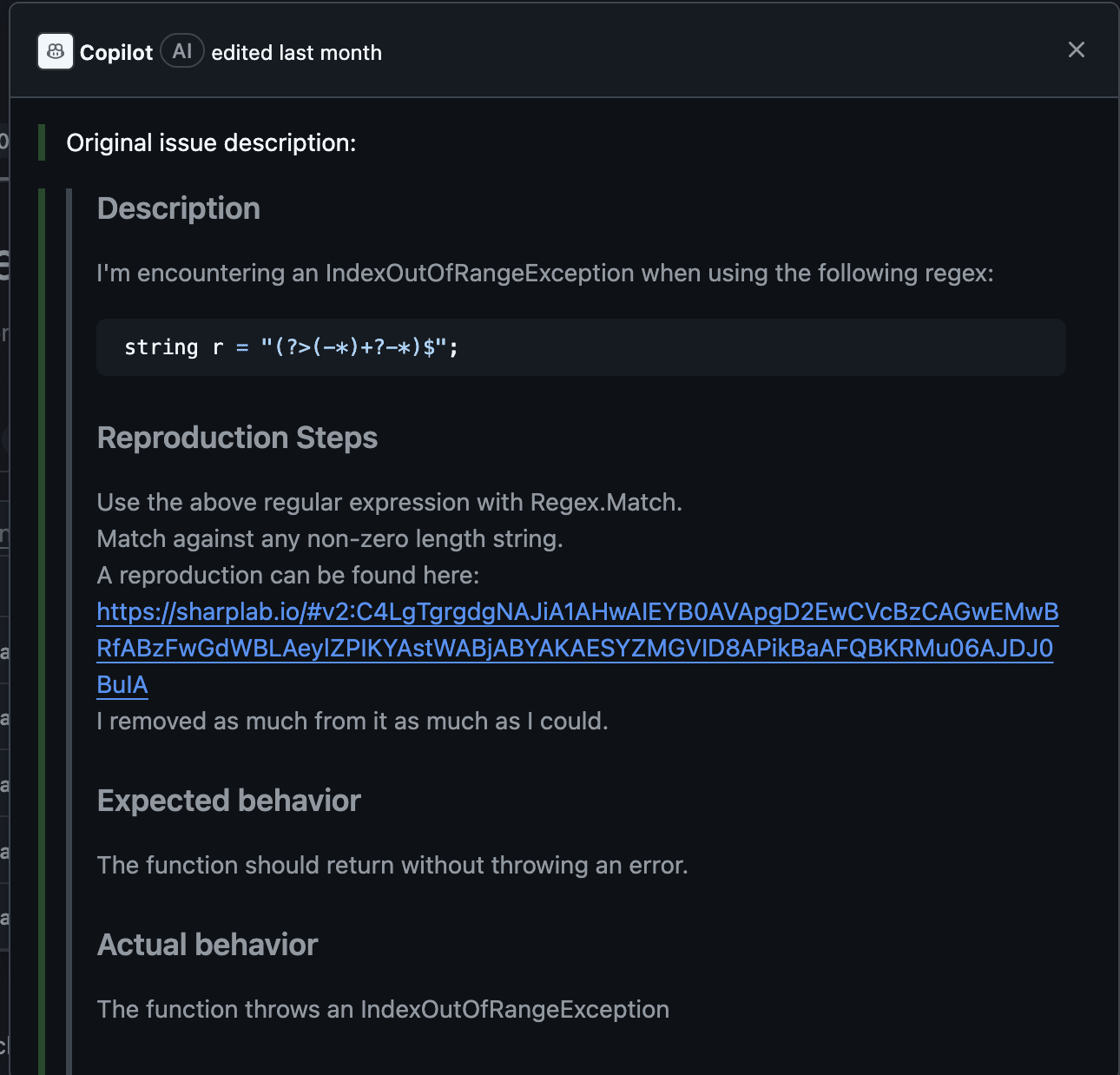

The .NET team experienced one such agent drawback when they tried to tackle an IndexOutOfRange error (issue 115733). Microsoft’s Seth did not provide commentary for this pull request.

GitHub issue 115733 seeks to fix a problem with how Microsoft’s .NET codebase handles pattern matching using regular expressions (regex). Regex strings are shortcuts for quickly searching, validating, or even manipulating text within systems, such as validating whether an email matches a specific pattern for verification purposes. In this case, there was a hitch with matching. The code tried to process a regex pattern – “(?>(-*)+?-*)$” – and crashed with an IndexOutOfRange error, instead of returning a result saying there was no match.

Giri describes this as a logic issue. Imagine you’re reading a short book and you’re asked to find a pattern: a bunch of dashes at the end of a specified paragraph. Only there aren’t any dashes. Instead of stopping when you reach the end of the paragraph, you keep looking “beyond” what’s actually there, and the search fails because no instruction was given on what to do if there was no match.
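To make the expected behavior concrete, here is a minimal Python sketch of a regex check that reports “no match” as an ordinary result rather than raising an error. It is not the .NET code from the issue: the deliberately simple pattern and the helper name `looks_like_email` are assumptions made purely for illustration.

```python
import re

# Illustrative pattern only (an assumption, not from the article);
# real-world email validation is considerably more involved.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def looks_like_email(text: str) -> bool:
    """Return True if the whole string matches the pattern, False otherwise."""
    # fullmatch returns a match object on success and None on failure;
    # None is the normal "no match" result, not an error condition.
    return EMAIL_PATTERN.fullmatch(text) is not None


print(looks_like_email("dev@example.com"))  # True
print(looks_like_email("not-an-email"))     # False
```

The point of the sketch is the second call: a well-behaved regex engine answers “no” for input that doesn’t fit, which is what the .NET team expected instead of a crash.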

While the agent provided a solution to this problem, comments on the commit from the .NET team show that their goal wasn’t to fix the issue for that one regex string, but to solve the underlying problem, Giri said.

Fig.2. .NET developer Stephen Toub encourages GitHub’s coding agent to think about the underlying issue (GitHub)

“Rather than saying, well, why is the iterator going beyond the end of something? [The agent] just goes, ‘oh, well, I’ll just stop it from going beyond the end of [the length of the inputted regex],’” Colwell said. “And that doesn’t fix the underlying cause.”

When the Copilot coding agent is asked to look at the underlying issue, it provides an answer but does not take the initiative to implement it in the codebase.

“Instead of going, ‘let me check back and figure out what the problem is,’ it just goes, ‘I’ll just put a gate on it, now I’ll add tests to the gate,’” Colwell said. To elaborate: picture a cat that sneaks into your garden and destroys your rose bush. To stop the cat from coming back, you install a gate on that path and then test that gate. Everything is seemingly great, but because you didn’t fully analyze the issue, you fail to realize there are ten other paths the cat can take.
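The difference between gating a symptom and fixing the root cause can be sketched in a few lines of Python. This is a toy example under stated assumptions, not the .NET regex code: every function name here is hypothetical, and the off-by-one bug is invented to stand in for “whatever produced the bad index.”

```python
from typing import Callable, Sequence


def last_index_buggy(items: Sequence[str]) -> int:
    # Hypothetical root cause: off-by-one, the last valid index is len(items) - 1.
    return len(items)


def last_index_fixed(items: Sequence[str]) -> int:
    # Root-cause fix: the index is computed correctly for every caller.
    return len(items) - 1


def read_last_gated(items: Sequence[str]) -> str:
    # Symptom patch ("the gate"): clamp the bad index at this one call site.
    i = last_index_buggy(items)
    if i >= len(items):
        i = len(items) - 1
    return items[i]


def read_last_unpatched(
    items: Sequence[str],
    index_of_last: Callable[[Sequence[str]], int] = last_index_buggy,
) -> str:
    # A second call site (another "path for the cat") that nobody gated.
    return items[index_of_last(items)]


tokens = ["a", "b", "c"]
print(read_last_gated(tokens))  # c  (the gated path looks fine)
try:
    read_last_unpatched(tokens)
except IndexError:
    print("other path still fails")
print(read_last_unpatched(tokens, last_index_fixed))  # c  (fixed at the source)
```

Tests written against `read_last_gated` would pass while the bug remains reachable through every other caller, which is the pattern Colwell describes.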

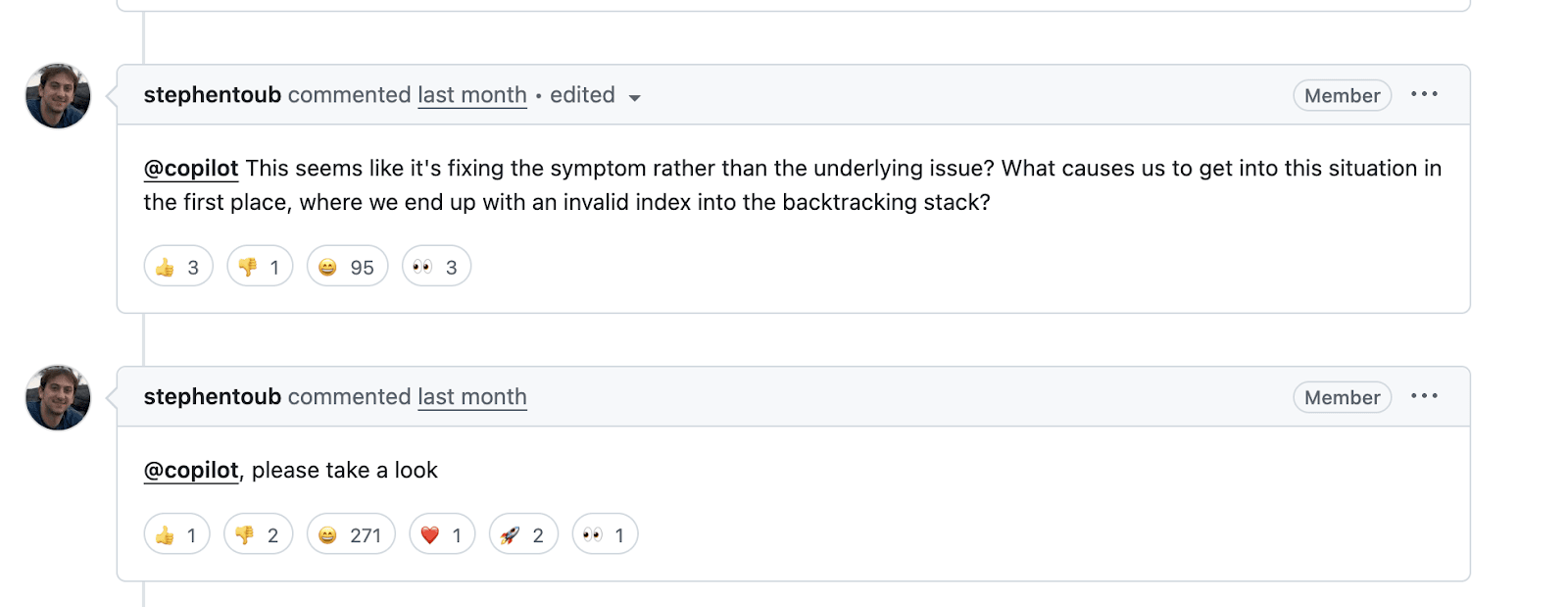

The agent addressed only one element of the issue rather than solving it, and the tests it added created further problems for the .NET team.

Fig.3. .NET developer Stephen Toub urges the coding agent to tackle issues with tests for the pull request (GitHub)

Ultimately, the pull request was closed without being merged after the agent couldn’t configure access to the dependencies it needed to build and test successfully, according to a commit comment from Toub.

Future implications for the industry

Because this issue played out in a public forum, it drew a strong response from the developer community, with many questioning whether AI is needed for these use cases.

“You’ll find even more senior devs will be coming in and saying, ‘I don’t even want to touch anything to do with AI anymore,’” Colwell said. “And the answer to it all is not that the AI is terrifyingly bad. It’s just that…you shouldn’t be letting it run off on its own.”

If a junior developer were given this task, for example, there would be guardrails in place. Usually, they’d ask questions and, if there’s an issue, realize they need to fix the underlying cause before acting, Colwell said.

“You kind of have to keep governing [AI] as if it’s a perpetually junior developer. Now, if you have a junior developer that’s perpetually not learning, you generally fire them. It’s hard to do that with an AI when you’re mandated to use it,” he said. “But none of this is saying that AI is a bad thing. We get incredible productivity out of AI, this is saying that this is the wrong way to use AI.”

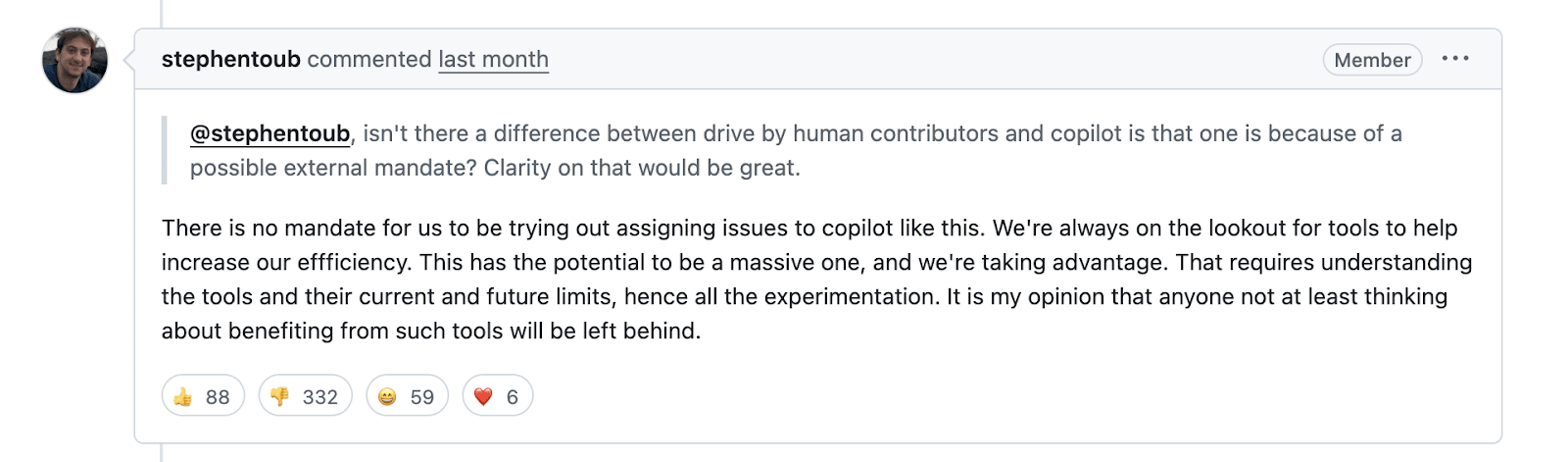

Microsoft did not respond to a request for comment on whether the firm sets any targets on usage of the Copilot agent, but in a different pull request, .NET developer Stephen Toub denies there is any mandate.

Fig.4. .NET developer Stephen Toub discussing mandate (GitHub)

Some developers have concerns about what happens if Copilot pushes code without human review in the future, or even without consent. Researcher Giri describes this concern as valid because it can break trust, and once that happens, it’s very difficult to regain. Currently, the agent’s pull requests require human approval before continuous integration workflows are run, according to a GitHub press release.

At Tricentis, if an engineer said they wanted to tackle a task using an autonomous agent from start to finish, the company would question why, Colwell said.

“No team so far has done that because every team that’s tried to do it has been like, ‘this is exhausting – just all the errors this thing is making,’” he said. “We don’t see that engineers who know what they’re doing are going to get a lot more value out of having a structured approach to agentic coding and being the owner.”

Syed Hussain, founder and CEO of decentralized AI startup Shiza, is “incredibly excited” to start using the coding agent in the development process because it streamlines the entire deployment process. Development teams can give the coding agent access to data and capabilities outside of GitHub through the Model Context Protocol (MCP), which makes it a “very powerful toolset,” Hussain said.

At Microsoft, many teams are using the coding agent, including the Azure and GitHub teams.

“As this technology is still new, we are actively experimenting with and optimizing its usage, but we are excited by the time it has saved developers, allowing them to focus on more critical and complex workstreams,” Seth said. “The .NET team will continue to share our progress openly through public GitHub draft pull requests, helping both our team and the industry learn how to maximize the benefits of the coding agent in GitHub Copilot.”