The consulting giant kicked a hornet’s nest when it launched a framework to measure software developer productivity. Here’s what engineers think they got wrong.

The debate over how to effectively measure productivity in software engineering has been going on for as long as lines of code have been written. But now, as the technology sector faces the end of an extended period of hypergrowth, the demand for actionable productivity metrics feels more urgent than ever. This August, consulting giant McKinsey announced its own solution to the problem in an article titled “Yes, you can measure software developer productivity,” to a, let’s say, mixed reaction.

Engineering leaders immediately took to their keyboards to explain what the consultants had gotten so wrong about developer productivity. But in an industry that continues to be rocked by mass layoffs, where teams are left to try and do more with less while running more and more complex codebases, the need for effective measurement of engineering organizations isn’t going anywhere. And, if the engineers won’t do it, the consultants will.

McKinsey’s examination of developer productivity

Developer productivity can be a tricky concept to define. Performance metrics specialist Swarmia describes it as systematically eliminating anything that gets in the way of delivery. And, in a world where agile methods have long taught us that you can’t improve what you can’t measure, you first have to identify blockers before you can remove them.

To do this, McKinsey has chosen to build on two popular engineering metrics frameworks: DORA and SPACE.

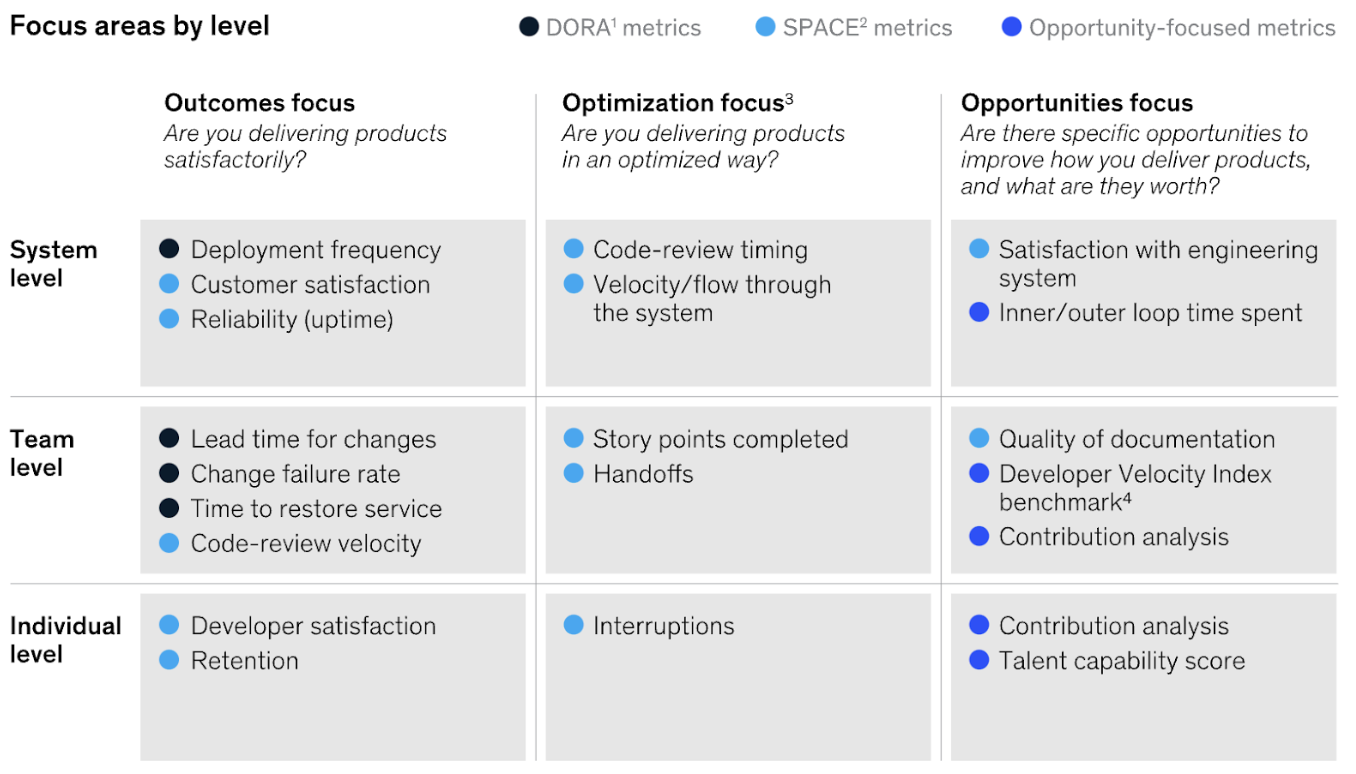

The McKinsey framework includes a “non-exhaustive” table of the way the DORA, SPACE, and McKinsey opportunities metrics are organized across the system, team, and individual levels.

DORA metrics came out of the DevOps Research and Assessment (DORA) team, which is now part of Google, in 2014 to measure how effectively engineering and operations were working together to deliver software based on:

- Change failure rate

- Failed deployment recovery time (until recently, called mean time to recovery (MTTR))

- Deployment frequency

- Lead time
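To make the four DORA measures concrete, here is a minimal Python sketch that derives them from a list of deployment records. The `Deployment` record, its field names, and the choice of medians are assumptions made for illustration; they are not DORA's official definitions or tooling, and real teams would pull this data from their own delivery pipeline.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime                   # when the change was committed
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # did it cause a production failure?
    recovered_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_summary(deployments: list[Deployment], period_days: int) -> dict:
    """Summarize the four DORA metrics for one reporting period."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    failures = [d for d in deployments if d.failed]
    recoveries = [d.recovered_at - d.deployed_at for d in failures if d.recovered_at]
    return {
        "deployment_frequency_per_day": len(deployments) / period_days,
        "median_lead_time": median(d.deployed_at - d.committed_at for d in deployments),
        "change_failure_rate": len(failures) / len(deployments),
        "median_recovery_time": median(recoveries) if recoveries else None,
    }


# Made-up sample data: four deployments over a two-day period, one of which failed.
start = datetime(2023, 8, 1)
sample = [
    Deployment(start, start + timedelta(hours=4)),
    Deployment(start, start + timedelta(hours=8), failed=True,
               recovered_at=start + timedelta(hours=9)),
    Deployment(start, start + timedelta(hours=12)),
    Deployment(start, start + timedelta(hours=24)),
]
summary = dora_summary(sample, period_days=2)
print(summary)
```

With this sample, the sketch reports two deployments per day, a median lead time of 10 hours, a change failure rate of 25%, and a median recovery time of one hour. All four numbers describe the delivery system as a whole rather than any individual developer.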

Then there is SPACE, which some of the original DORA authors introduced in 2021, suggesting 25 sociotechnical factors that fall into the following five buckets:

- Satisfaction and well-being

- Performance

- Activity

- Communication and collaboration

- Efficiency and flow

These two sets of metrics are the closest thing that the tech industry has to an agreed-upon standard, the McKinsey paper argues. The consultants then add four more metrics, which focus on opportunities for performance improvements:

- Inner/outer loop time spent

- A Developer Velocity Index (DVI) benchmark

- Contribution analysis

- Talent capability score

“On top of these already powerful metrics, our approach seeks to identify what can be done to improve how products are delivered and what those improvements are worth, without the need for heavy instrumentation,” the McKinsey authors write, to “create an end-to-end view of software developer productivity.”

As extreme programming creator Kent Beck and Pragmatic Engineer newsletter author Gergely Orosz wrote in their detailed two-part response, the McKinsey framework only measures effort or output, not outcomes and impact, which misses half of the software development lifecycle.

“Apart from the use of DORA metrics in this model, the rest is pretty much astrology,” said Dave Farley, co-author of Continuous Delivery: Reliable Software Releases through Build, Test, and Deployment Automation in his response to the article. While he argues that evidence-based metrics can serve as the foundation for experimentation and continuous improvement, McKinsey’s framework doesn’t attempt to understand “how much culture and organization affect [an engineering team’s] ability to produce their best work.”

Also, during a time of cutbacks and layoffs, productivity scores run the risk of creeping into performance reviews, which, Beck said, will distort the results. “The McKinsey framework will most likely do far more harm than good to organizations – and to the engineering culture at companies. Such damage could take years to undo,” Orosz and Beck argue.

Opportunity metric #1: Inner/outer loop time spent

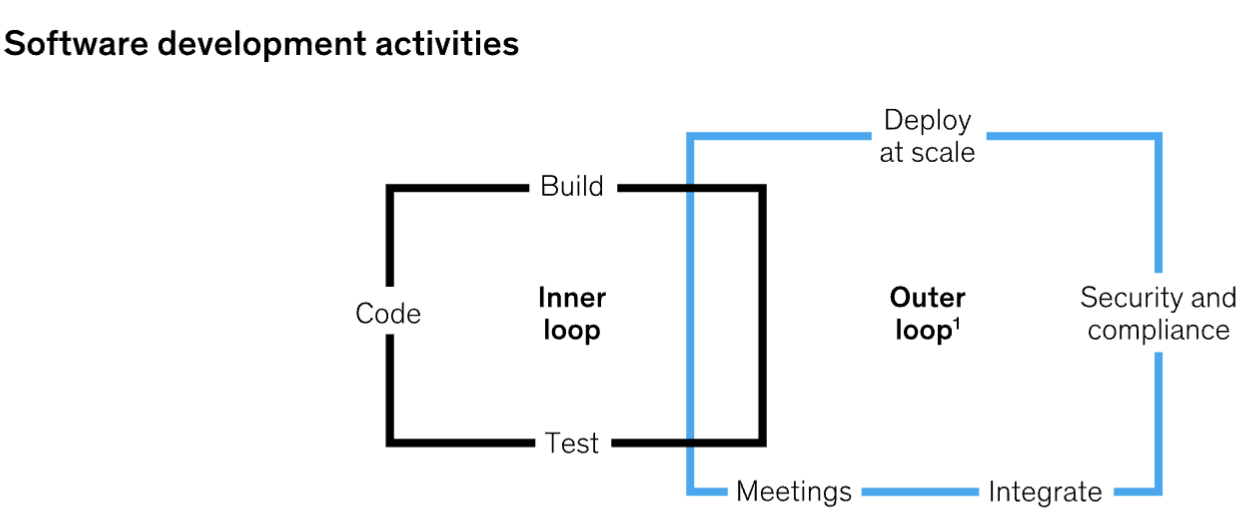

McKinsey illustrates the “non-exhaustive” inner and outer loop software development activities.

McKinsey argues that developers should spend at least 70% of their time on “inner loop” tasks. These are anything that contributes towards the building, coding, and testing of an application. That leaves the outer loop tasks, which the authors call “less fulfilling,” to include meetings, integration, security and compliance, and deploying at scale.
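As a rough illustration of the arithmetic behind that 70% target, the Python sketch below computes the inner-loop share of a week's logged hours. The activity labels follow the examples named above, but the time log, the function name, and the way hours are captured are hypothetical; the McKinsey article does not specify how this time would be recorded.

```python
# Activities treated as "inner loop" in this sketch (building, coding, testing).
INNER_LOOP = {"build", "code", "test"}

# McKinsey's suggested minimum share of time on inner-loop work.
INNER_LOOP_TARGET = 0.70


def inner_loop_share(hours_by_activity: dict[str, float]) -> float:
    """Return the fraction of logged hours spent on inner-loop activities."""
    total = sum(hours_by_activity.values())
    if total <= 0:
        raise ValueError("no hours logged")
    inner = sum(h for activity, h in hours_by_activity.items()
                if activity in INNER_LOOP)
    return inner / total


# Made-up 40-hour week for one developer.
week = {
    "code": 16,
    "build": 4,
    "test": 6,
    "meetings": 8,
    "integration": 2,
    "security and compliance": 2,
    "deploy": 2,
}
share = inner_loop_share(week)
meets_target = share >= INNER_LOOP_TARGET
print(f"inner loop share: {share:.0%}, meets target: {meets_target}")
```

In this made-up week, 26 of 40 hours (65%) count as inner loop, so the developer would fall short of the target even though the remaining 14 hours include mentoring-style meetings and security work.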

While modern approaches to software development, from DevOps to platform engineering, have sought to decrease repetitive work and cognitive load on developers so that they can focus on problem-solving, McKinsey argues that the objective of productivity measures is to incentivize developers to code more. This diminishes the idea that developers are creative workers.

Also, by distancing security and compliance from developer work, McKinsey is going against the grain of incentivizing greater security awareness in dev teams. Only 3% of developers want to be responsible for security, but with 84% of security breaches happening at the application level, teams need continuous visibility into whether they are complying with standards.

We know that developers would rather be in fewer meetings, but by labeling them as outer loop activities, McKinsey risks further demonizing meetings, which can range from 1:1 mentoring, to pair programming, team retrospectives, and post-mortems. These are essential rituals for forming team bonds, sharing information, improving quality, and aligning engineering with business goals.

Opportunity metric #2: DVI benchmark

The quarterly developer survey is one of the most common ways to qualitatively measure developer experience to date. Since 2014, McKinsey’s DVI has analyzed 13 capabilities composed of 46 individual performance drivers in an attempt to measure an enterprise’s technology, working practices, and organizational enablement. This index can be used to benchmark against peers and highlight velocity improvement areas, be it through backlog management, testing, or security and compliance, for example.

However, beyond the three dimensions of technology, working practices, and organizational enablement, the DVI methodology appears to be limited in its scope. As software engineer Eric Goebelbecker wrote, velocity can easily become an inconsistent measurement that can sacrifice quality for speed. Like many of the McKinsey metrics, it can be easily gamed by padding out story points. “Velocity isn’t an accurate reflection of your engineering orgs’ productivity, and it can – more often than not – lead to bad-quality products and burnt-out developers,” he wrote.

Opportunity metric #3: Contribution analysis

McKinsey identifies individual contributions to the team’s backlog in the hope of surfacing “trends that inhibit the optimization of that team’s capacity.” The aim of this activity, the article explains, is so that team leads can “manage clear expectations for output and improve performance as a result,” as well as identify opportunities for upskilling, training, and rethinking role distribution.

For Eirini Kalliamvakou, staff researcher at GitHub, this mixing of productivity metrics and performance is a red flag. This is in part because productivity tracking is often associated with “counting beans,” be it individual tasks, or how much time it takes to do things.

“That sort of approach is something that developers kind of hate. They find it anxiety-inducing. They find that it doesn’t really do justice to how multifaceted and complex their work is,” Kalliamvakou said in a Good Day Project webinar. “They have seen it in the past used against them. The simplification of their work doesn’t really work in their favor. And to them, it feels a lot like spying, like somebody’s watching how much of a thing they are doing or how long it took them to do a thing.”

While this statement was made in 2021, it encapsulates the suspicion that software developers have of the kind of approach McKinsey is espousing. This is especially true in 2023, when the fear of layoffs remains acute. After all, as Beck and Orosz point out, one popular reason CTOs want to measure developer productivity is to identify which engineers to fire.

The SPACE framework goes out of its way to warn about measuring developers by story points completed or lines of code. “If leaders measured progress using story points without asking developers about their ability to work quickly, they wouldn’t be able to identify if something wasn’t working and the team was doing workarounds and burning out, or if a new innovation was working particularly well and could be used to help other teams that may be struggling,” the framework authors wrote.

In the article, McKinsey includes an anonymous “success case” of a customer who, through the contribution analysis, realized that “its most talented developers were spending excessive time on non-coding activities such as design sessions or managing interdependencies across teams.” The company subsequently changed its operating model to enable those highest-value developers to do more coding.

This again assumes that coding is the most valuable thing a developer does. That doesn’t scale and is a quick way to lose talent. By focusing on individual contributions and not the team, this approach fails to include essential glue work like code reviews, mentoring others, and experimentation. It also devalues work like design sessions, which help make sure you’re building something users actually want, instead of wasting time on an unnecessary solution.

“Your highest-value developers are 10x by enabling other developers,” Dan Terhorst-North, an organizational transformation consultant, wrote in his review of the McKinsey article. “You appear to be adopting a simplistic model where senior people just do what junior people do but more so, which means the goal is to keep their hands to the keyboard. The whole push of the article is that the best developers should be ‘doing’ rather than thinking or supporting,” he wrote.

Opportunity metric #4: Talent capability score

It’s difficult to know how much engineering talent you need. McKinsey’s talent capability score attempts to map the individual knowledge, skills, and abilities of an organization to identify opportunities for coaching and upskilling.

The problem is, “software development doesn’t scale well by adding people and the evidence that we do have also says that success isn’t based on having the right engineering talent,” Farley said, citing both The Mythical Man-Month and the more recent Google re:Work project. These contend that project success is predicted by how the team organizes its work and how much trust they have in each other, elements that are clearly absent from McKinsey’s framework.

It’s also unclear at this stage how much of this metrics gathering is communicated to engineering teams at all. Most developer productivity metrics, including those outlined in SPACE, kick off by asking developers about what they need. This is an important step in building psychological safety between managers and their teams. The McKinsey framework feels too external to the teams whose opportunities it is measuring. If developers are understandably distrustful of productivity metrics and efforts, it’s important to explain from the start what you are trying to accomplish, lest you damage trust.

Where’s the culture?

By ignoring the social aspects of the sociotechnical software development endeavor, McKinsey is falling back on the perception that developers are most valuable when they are coding.

Peter Gillard-Moss, head of technology at Thoughtworks Technical Operations, wrote that McKinsey misses the importance of going beyond metrics to create cultures of engineering excellence. “Without cultivating the right culture, we cannot expect meaningful impact on the business,” he wrote.

The Association for Computing Machinery (ACM) published a paper in May titled DevEx: What Actually Drives Productivity. This contends that developer experience and productivity are intrinsically linked via three dimensions:

- Feedback loops – how quickly can developers deliver work into production

- Cognitive load – how much do developers have to learn to actually get value to production

- Flow state – free of distractions and unplanned interruptions to work

This paper argues that in order to improve developer productivity, organizations need to remove friction and frustration in what lead author Abi Noda called a “highly personal and context-dependent experience.”

The importance of culture to productivity simply can’t be overlooked. The 2023 State of DevOps report found that a high-performing organization that cultivates a generative organizational culture – one grounded in high trust and information flow – experiences 30% higher performance, with a dramatic increase in productivity and job satisfaction, alongside a decrease in burnout.

“[The article] misses the need for capable engineering leads and engineers who have developed the deep instinct to understand how to use a metric appropriately, when to take it seriously, and when to ignore it,” Gillard-Moss said. “Most importantly, how to develop the intuition throughout the engineering organization. Data is fantastic, but you need humans who can use the data to make good quality decisions.”

Focusing on the individual is detrimental to team productivity

The term ‘developer productivity’ itself may be part of the problem. Instead, organizations should aim to measure software development team enablement to identify processes, working styles, technology, and culture changes that allow development teams to do their best work.

“McKinsey’s methods directly contradict those of leading tech companies like Google, Microsoft, and Atlassian – which, rather than attempting to measure individual output, focus on understanding and improving developers’ experiences,” Noda said. “By addressing developers’ biggest sources of friction and frustration, these companies are able to consistently produce the most productive development teams.”

“Measuring software development in terms of individual developer productivity is a terrible idea,” Farley said. “Being smart in how we structure and organize our work is much more important than the level of individual genius.”

Final thoughts

By conveniently ignoring the possibility that developers are human beings who can be motivated or demotivated by productivity metrics, the McKinsey approach is an unwelcome return to command-and-control button pushing during a time of heightened tension for software developer teams.

While engineering leaders continue to look for ways to measure and improve the speed at which teams release high-quality software to users, this framework dangerously focuses on simple outputs and overlooks the importance of developer happiness, collaboration, and teamwork when it comes to producing better software, faster.

McKinsey declined to comment.