If the role of a good cloud architect is to design and build cost-effective software, is there a formula to achieve that goal every time?

A successful enterprise software application must effectively reflect the appropriate business context. But how do you ensure this is the case at the architecture phase?

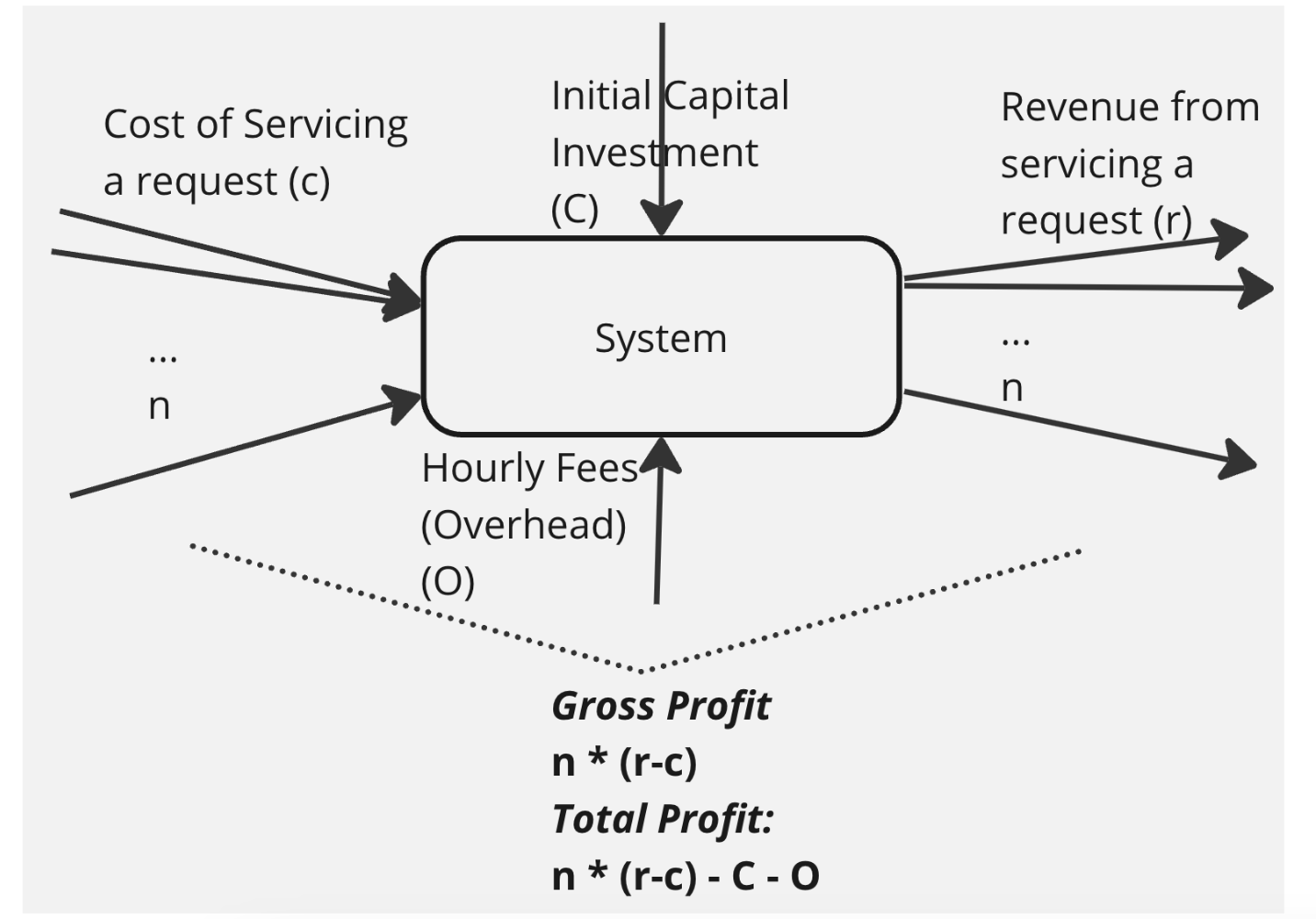

I find it helpful to start by creating an oversimplified business model at the design stage. In this model, a business owner invests capital to establish a factory that transforms raw materials into finished products sold at a reasonable price. The business also has ongoing operational costs, such as rent and utilities.

The role of technical architects is to consider the initial outlay, the per-request fees, and the operational costs to build the most profitable system possible.

Figure 1: The economics of running a software business. Initial capital is the upfront investment that sets up the system. Overhead costs stay constant regardless of traffic. Costs and earnings per request determine the business’s profit margin.

To represent this business model, I’ve formulated an equation that I’ll reference in this article as the “profit equation.”

$$\text{profit} = n \times (r - c) - C - O$$
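To make the equation concrete, here is a minimal Python sketch. It assumes the equation reads as profit = n × (r − c) − C − O, where n is the number of requests, r and c are the revenue and cost per request, C is the initial capital, and O is the overhead. The function names and all figures are illustrative assumptions, not real pricing.

```python
def profit(n: int, r: float, c: float, C: float, O: float) -> float:
    """Profit equation: n * (r - c) - C - O."""
    return n * (r - c) - C - O


def break_even_requests(r: float, c: float, C: float, O: float) -> float:
    """Number of requests at which profit reaches zero (requires r > c)."""
    if r <= c:
        raise ValueError("revenue per request must exceed cost per request")
    return (C + O) / (r - c)


# Hypothetical figures: 10,000 requests, $0.50 revenue and $0.125 cost
# per request, $1,000 initial capital, $500 overhead.
print(profit(n=10_000, r=0.50, c=0.125, C=1_000, O=500))   # 2250.0
print(break_even_requests(r=0.50, c=0.125, C=1_000, O=500))  # 4000.0
```

With these made-up numbers, the system only turns a profit after roughly 4,000 requests; below that, the fixed costs dominate.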

Cost Centers

If the primary responsibility of an architect is to increase profitability, the profit equation helps in identifying costs, which include:

Initial Capital (C)

Historically, tech companies spent a lot of money on data centers. Amazon Web Services (AWS) changed the game by allowing businesses to rent parts of its expansive cloud infrastructure and only pay for what they use.

Hourly Costs (Operational Costs) (O)

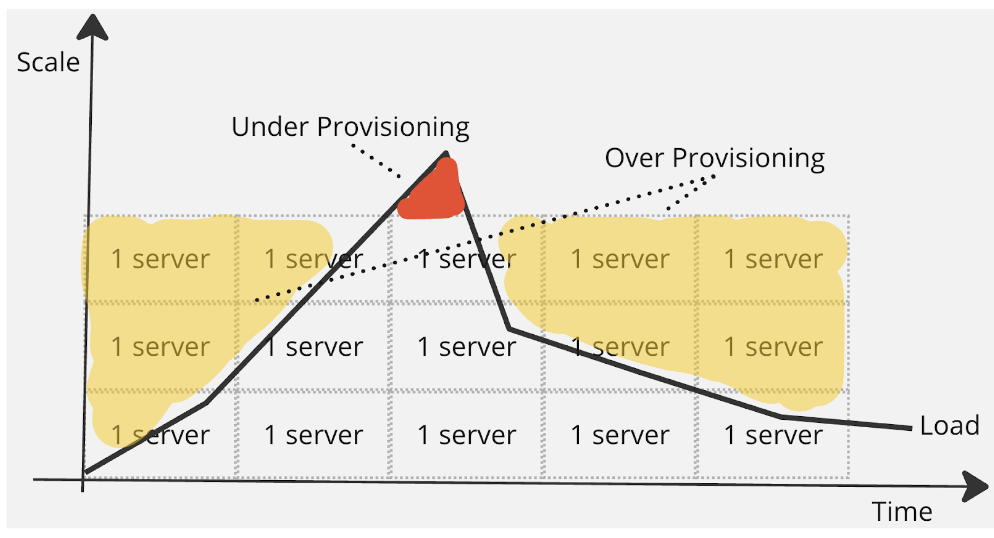

Once your code is written, it’s time to deploy. In a physical factory, there are machines; in the digital realm, we have servers. An application may need to be constantly accessible, requiring consistent infrastructure and incurring steady costs. As user numbers grow, additional servers might be needed, increasing costs. These costs rise in chunks, similar to factories adding machines during high demand, and are known as “step costs.”
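The step pattern can be sketched in a few lines of Python. This is a simplified model under stated assumptions: each server handles a fixed number of requests per hour, at least one server always stays up, and the capacity and price values are hypothetical.

```python
import math


def servers_needed(requests_per_hour: int, capacity_per_server: int) -> int:
    # Keep at least one server running so the application stays reachable.
    return max(1, math.ceil(requests_per_hour / capacity_per_server))


def hourly_cost(
    requests_per_hour: int,
    capacity_per_server: int = 1_000,    # hypothetical capacity
    cost_per_server_hour: float = 0.25,  # hypothetical price
) -> float:
    return servers_needed(requests_per_hour, capacity_per_server) * cost_per_server_hour


# The cost stays flat until a capacity boundary is crossed, then jumps.
for load in (0, 500, 1_000, 1_001, 2_500):
    print(load, hourly_cost(load))
```

Going from 1,000 to 1,001 requests per hour doubles the bill in this model, which is exactly the kind of jump a step cost describes.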

Request Costs (Raw Materials) (c)

While operational costs have this step-like pattern, request costs are linear. Whenever a user sends a request, think of it as using a unit of raw material. You pay for components like bandwidth and data transfer. If your traffic skyrockets, these costs rise in direct proportion.

Managing Resources

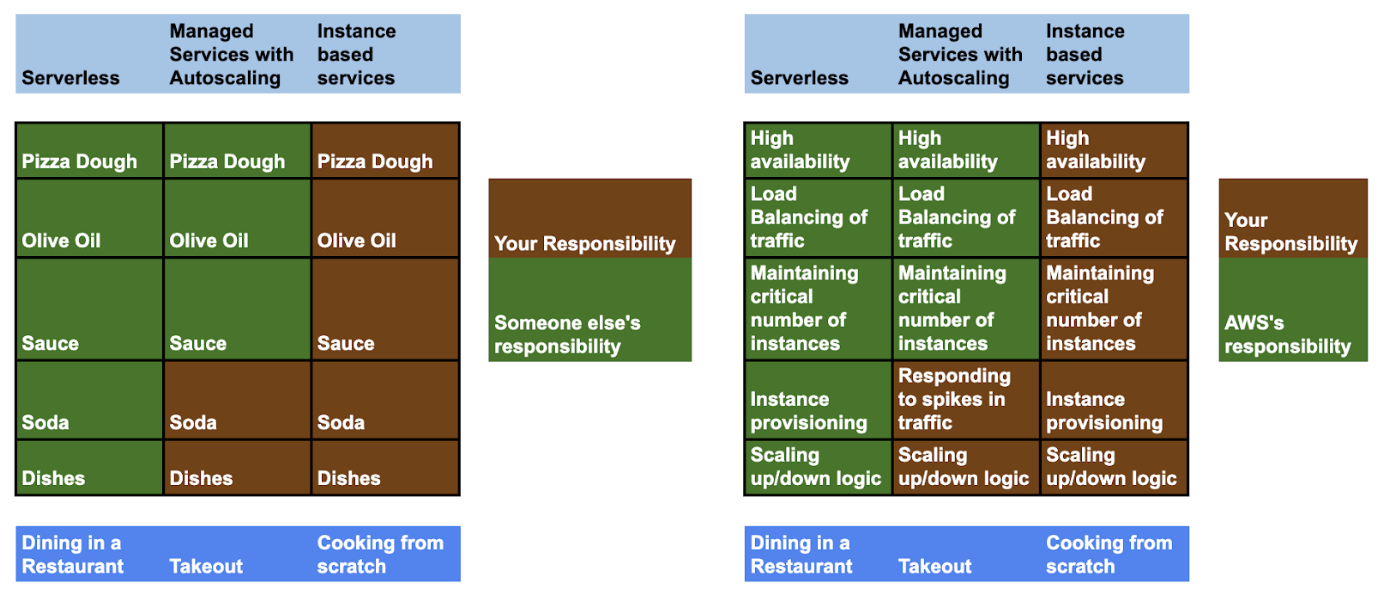

In designing applications, architects must balance cost, efficiency, and scalability. Selecting inappropriate resources can disrupt this harmony. You can choose to outsource some tasks to your cloud provider – like scaling and administration based on chosen resources – while also maintaining control in areas you prioritize.

The AWS Shared Responsibility Model

AWS specifically operates a Shared Responsibility Model (SRM), where it clearly defines the areas of control it undertakes. This gives architects three clear options regarding how much they manually control and where they hand off to the cloud provider.

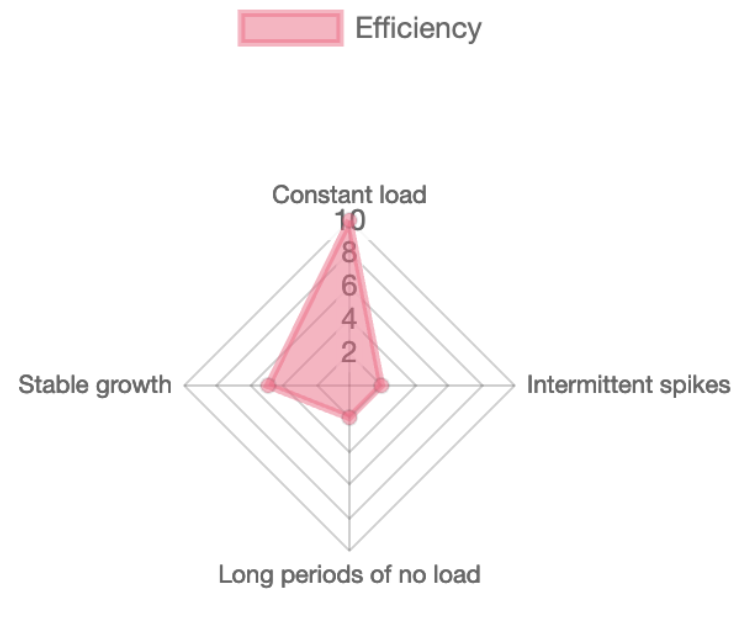

First, you can opt to provision servers for incoming traffic manually. These servers come with a fixed hourly rate regardless of the number of requests. When these servers are near their capacity, you must scale them up. Depending on the resource, you might have to do this manually, impacting efficiency.

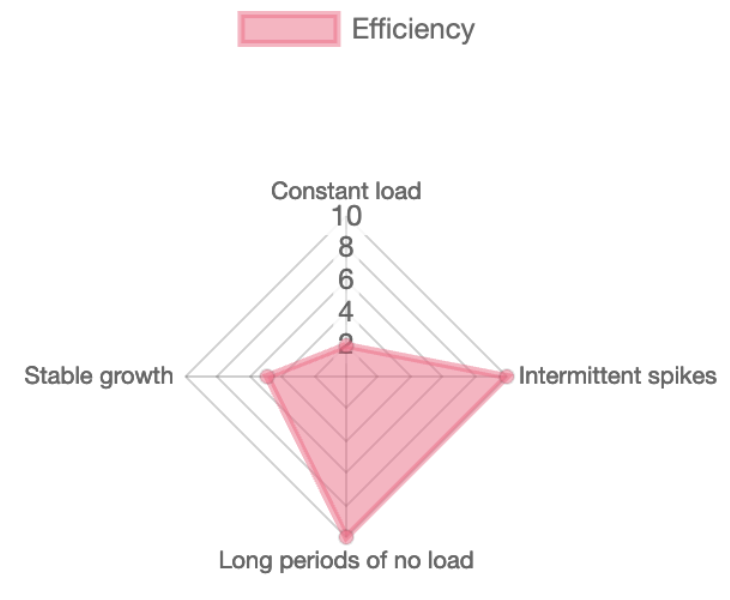

Figure: With a steady load, instance-based resources are more efficient because their costs are predictable. But if the load changes, manual scaling may be required.

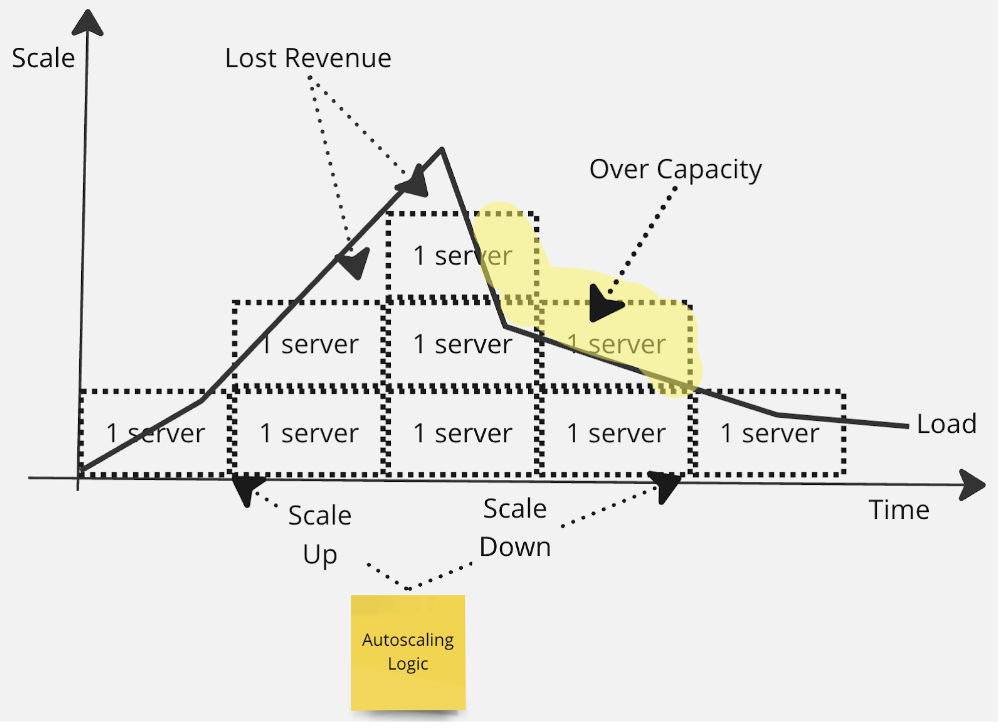

Alternatively, many cloud resources offer autoscaling, letting you adjust server capacity automatically based on factors like utilization or a set schedule. While initial setup is necessary, the cloud provider tweaks your server capacity according to your autoscaling guidelines.

Figure: If traffic growth is steady, a simple autoscaling policy can scale up the system automatically and downscale when there are periods of no load.
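As a rough illustration of a utilization-based policy, the sketch below resizes a fleet so that average utilization moves back toward a target. It is a simplified rule of my own for illustration, not any cloud provider's actual algorithm; the function name, the 50% target, and the server limits are all assumptions.

```python
import math


def desired_capacity(
    current_servers: int,
    utilization: float,      # average utilization across servers, 0.0 to 1.0
    target: float = 0.5,     # hypothetical target utilization
    min_servers: int = 1,    # hypothetical lower bound
    max_servers: int = 10,   # hypothetical upper bound
) -> int:
    # Scale the fleet in proportion to how far utilization is from the target,
    # then clamp the result to the configured limits.
    raw = math.ceil(current_servers * utilization / target)
    return max(min_servers, min(max_servers, raw))


print(desired_capacity(4, 0.75))  # 6: scale up under heavy load
print(desired_capacity(4, 0.25))  # 2: scale down under light load
print(desired_capacity(4, 0.0))   # 1: never below the minimum
print(desired_capacity(8, 1.0))   # 10: never above the maximum
```

The minimum and maximum are where cost control lives: the maximum caps your hourly spend, while the minimum sets the floor you pay for even with no traffic.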

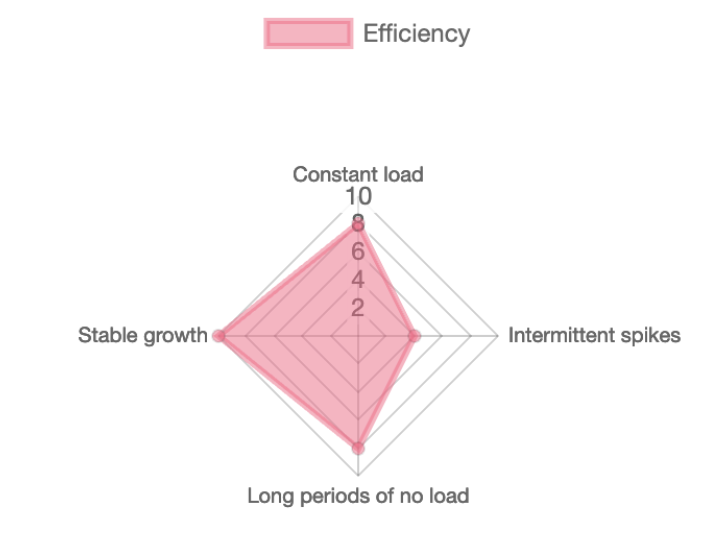

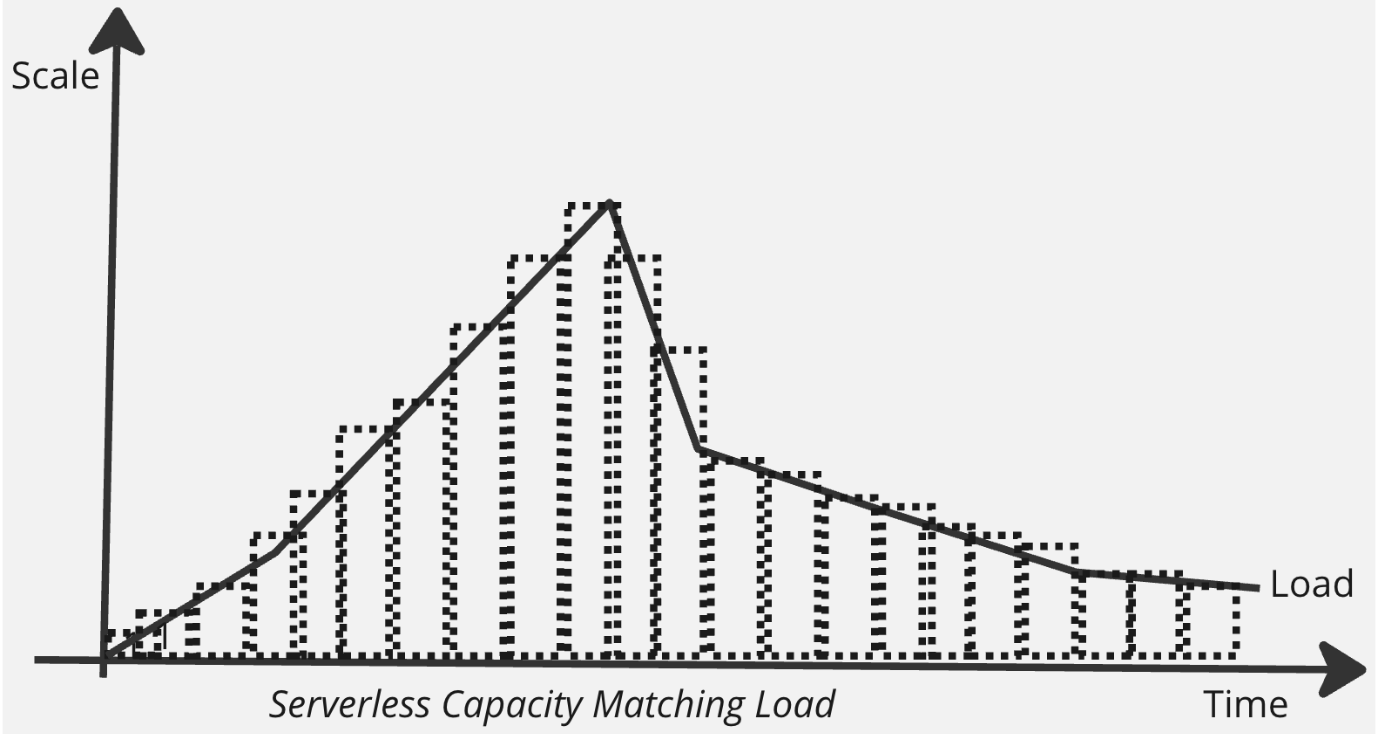

At the other extreme are serverless resources, where the cloud provider takes the reins, handling scaling, management, and security. You are charged a premium based on the number of requests, and while these resources excel in specific functions, they’re not as flexible.

Figure: These systems are designed for spiky traffic.

The system scales up when traffic spikes. In the event of zero load, the system scales down to zero without charging you.

While resources with instances have an initial cost, serverless resources can get expensive with a higher volume of requests. Therefore, the cost-effectiveness of your setup often hinges on your request volume and your ability to predict it.
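One way to reason about this trade-off is to find the request volume at which the two options cost the same. The sketch below assumes a flat monthly price for an instance fleet sized for peak load and a purely per-request serverless price; both prices are hypothetical and the function names are mine.

```python
def serverless_monthly_cost(million_requests: float, price_per_million: float) -> float:
    """Serverless cost grows linearly with request volume."""
    return million_requests * price_per_million


def break_even_million_requests(instance_monthly_cost: float, price_per_million: float) -> float:
    """Volume (in millions of requests) where both options cost the same."""
    return instance_monthly_cost / price_per_million


def cheaper_option(
    million_requests: float,
    instance_monthly_cost: float,
    price_per_million: float,
) -> str:
    serverless = serverless_monthly_cost(million_requests, price_per_million)
    return "serverless" if serverless < instance_monthly_cost else "instances"


# Hypothetical prices: $100/month for instances, $2 per million serverless requests.
print(break_even_million_requests(100.0, 2.0))  # 50.0
print(cheaper_option(25, 100.0, 2.0))           # serverless
print(cheaper_option(80, 100.0, 2.0))           # instances
```

If your forecast puts you well below the break-even volume, serverless is the cheaper bet; well above it, instances win, provided the forecast holds.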

Figure: Pizza as a service vs. Software as a service

Architecture strategies

The key to selecting the best strategy is to identify where your business aims to stand out and where it’s willing to make concessions.

Upfront investment

If you are making large investments upfront, success depends on accurately forecasting traffic trends. Architects and developers work together to predict future traffic needs. They then invest accordingly to support expected traffic, ensuring enough capacity.

This approach calls for a substantial investment in (C) to reduce the per-request cost (c) in our profit equation. When (n) is large, the cost of (C) is spread across many requests, leveraging economies of scale.
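The economies-of-scale effect is easy to see by spreading (C) across (n). The following is a minimal sketch with made-up numbers; `effective_cost_per_request` is a hypothetical helper, not part of any library.

```python
def effective_cost_per_request(n: int, c: float, C: float) -> float:
    """Per-request cost once the upfront investment C is spread over n requests."""
    if n <= 0:
        raise ValueError("n must be positive")
    return c + C / n


# Hypothetical figures: $0.25 cost per request, $1,000 upfront investment.
for n in (1_000, 10_000, 100_000):
    print(n, effective_cost_per_request(n, c=0.25, C=1_000.0))
```

At 1,000 requests the upfront investment dominates the cost of each request; at 100,000 it is almost invisible. That is also why this strategy hurts when the traffic forecast turns out to be too optimistic.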

Steady stalwarts

Another strategy is to be steady stalwarts, which seeks to balance the flexible pay-as-you-go model with a fixed upfront investment. This starts with an estimated demand and adjusts capacity as needed if changes are steady and foreseeable. Autoscaling is generally used to adjust capacity in response to traffic changes.

This approach adjusts (O) by balancing between (c) and (C). If there are unexpected traffic surges, you might face situations of having too much or too little capacity. Thus, this strategy best suits platforms with consistent traffic.

Flexible spending

For customers who prioritize flexibility and the ability to scale rapidly, the primary challenge is accurately predicting traffic or load. Such customers could benefit from using serverless resources to mitigate the risk of unreliable forecasting.

If you exclude initial costs (C) and overhead (O), your expenses align directly with the volume of requests you get. More requests (n) mean higher costs. Serverless options usually cost more than fully utilized instance-based resources. This method allows businesses to delay scaling until they experience maximum traffic.

Scalability

The magic wand that lets your application handle more user requests is scalability. Think of it like a highway. The more lanes (servers) you have, the more cars (requests) you can handle. But every lane (scale) comes with a price. While aiming for higher profits, managing the number of lanes is crucial.

To break it down, the core of the profit equation is the number of requests (n) times the difference between revenue (r) and cost (c) per request, with initial capital (C) and overhead (O) then subtracted. You’ll only make a good profit if each car on the highway (request) earns more (r) than it costs (c).

However, as you add more lanes, the maintenance cost rises, shrinking the gap between revenue and cost. Thus, the benefit gained from each added lane reduces over time. Eventually, adding another lane might not be worth the cost. For architects, this poses a dilemma. Should they continue to add lanes even if it’s not profitable?
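To illustrate the diminishing return, here is a toy model in Python. It assumes every lane is fully used and that the upkeep of the k-th lane grows linearly with k; that cost shape and all the numbers are assumptions chosen purely for illustration.

```python
def marginal_profit(
    k: int,
    requests_per_lane: int,
    margin_per_request: float,  # r - c for one request
    upkeep_step: float,         # hypothetical: the k-th lane costs upkeep_step * k
) -> float:
    """Extra profit from adding the k-th lane (k starts at 1)."""
    return requests_per_lane * margin_per_request - upkeep_step * k


def profitable_lanes(
    requests_per_lane: int,
    margin_per_request: float,
    upkeep_step: float,
    max_lanes: int = 1_000,
) -> int:
    """Count lanes until adding one more no longer increases profit."""
    lanes = 0
    while lanes < max_lanes and marginal_profit(
        lanes + 1, requests_per_lane, margin_per_request, upkeep_step
    ) > 0:
        lanes += 1
    return lanes


# Hypothetical figures: 1,000 requests per lane, $0.50 margin, $100 upkeep step.
print(profitable_lanes(1_000, 0.5, 100.0))  # 4
```

In this model the fifth lane earns exactly what it costs, so any lane beyond the fourth is justified only by non-monetary reasons.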

And here’s a twist: not every loss is about money. What if a car (request) gets turned away? It could tarnish the brand’s image or affect other system parts. While counting pennies, remember to weigh the non-monetary costs, too.

In my experience, it’s vital for architects to weigh whether to expand the highway or close it temporarily for maintenance. Sometimes, the best growth strategy is to pause and recalibrate.

What should architects do?

Imagine building software as a high-stakes game of chess. You’ve got to make trade-offs or strategic choices tailored to your business’s unique playbook.

Do you have a crystal ball that predicts growth? Go ahead and double down on beefy instance-based infrastructure. Not sure what the future holds? You might want the agility of a serverless setup. In my playbook, it’s often wise to dip your toes in the serverless waters first. This gives you the freedom to experiment without going all-in.

If you end up picking the wrong path, hit pause and retrace your steps. When you get it right, the best systems work like well-oiled machines – each piece complements the other, and the whole thing just purrs.