Estimated reading time: 8 minutes

AI continues to act as a double-edged sword – capable of generating “new” legacy code, but also allowing us to tackle “old” legacy code in new ways.

Ever since AI’s entry into the mainstream in 2023, it has transformed the software industry. While claims that engineers will be replaced seem increasingly far-fetched, there can be little doubt that the technology of large language models (LLMs) dramatically redraws what’s possible. One of the clearest examples can be seen in how AI impacts one of software engineering’s most persistent challenges: dealing with legacy code.

What is legacy code?

Legacy code means different things to different people:

- Old code using antiquated formats.

- “Someone else’s code,” especially from developers who’ve long since left.

- Scary code – difficult to read, confusing, and hard to maintain.

Sometimes, it’s a mix of all three.

But these definitions share one problem: they’re entirely subjective.

Michael Feathers offers an objective alternative in his seminal book Working Effectively with Legacy Code: legacy code is simply code without tests. When considered from this angle, we can see AI’s impact on this aspect of software development in two key ways:

- It creates code without tests, thus generating more legacy code.

- It allows us to tackle legacy code in new and exciting ways.

Your inbox, upgraded.

Receive weekly engineering insights to level up your leadership approach.

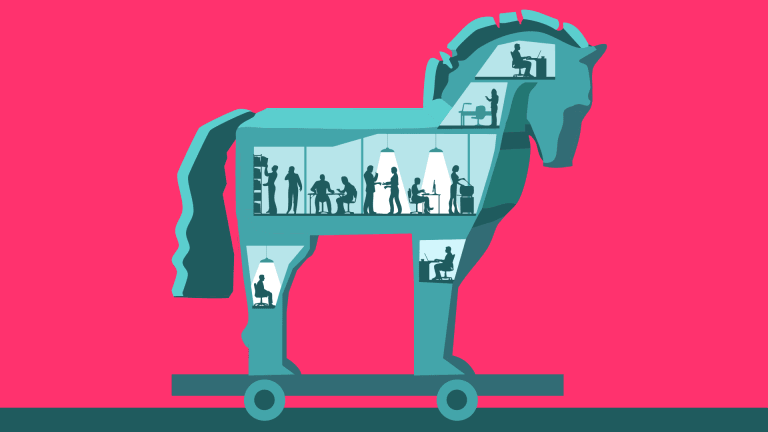

AI as a legacy generator

By Feathers’ definition, most AI-generated code qualifies as legacy from day one. “Vibe-coding” tools – from ChatGPT to Copilot to agentic builders like Replit and Bolt – rarely write tests unless explicitly prompted.

For prototypes and small projects, this is fine. Greenfield codebases that aren’t optimized for serious future development are the agentic code generator’s sweet spot today. But prototypes that generate real business value don’t stay prototypes – they become production systems that need maintenance.

This creates a significant challenge. AI dramatically expands who can write code and what problems can be solved. But much of this new code, especially from non-engineers, will be legacy code by definition as soon as it’s created.

In an interview, Rob Woodhead, cofounder of digital agency Old Street Labs, said he was seeing an uptick in clients coming to him with functioning prototypes built with LLM-powered tools.

In one case, a client came to the agency with a vibe-coded app that already had ten paying customers. He didn’t want to touch it, lest he break the existing functionality. In effect, he had created a brand new legacy codebase.

In many cases, Woodhead says, these applications need to be rewritten from scratch. The good news is that the investment in doing so is much easier to justify with a market-validated prototype. Previously, non-technical founders would often turn up with an idea for an app and little more – a much riskier endeavour.

AI unlocks so much more code to be written, so many more problems to be solved: problems that were previously prohibitively expensive in terms of dev time and resources. It expands the size of the pie dramatically. But that expansion comes with a cost: a lot of that code will lack the test coverage that separates maintainable software from legacy nightmares.

AI as a solution for legacy code

If AI generates far more legacy code than ever before, can it also come to the rescue?

Understanding legacy code

AI has proven effective at making legacy code comprehensible. In conversation, Andrew Rahn, Head of Engineering at Modern Logic, described several key benefits when working with unfamiliar or legacy codebases.

AI helps developers orient faster in a new codebase by inferring patterns, frameworks, and conventions without manually reverse-engineering everything. When Rahn encounters a “what the hell is this function doing?” moment, dropping code into an LLM provides plain-English explanations and breakdowns of complex logic, especially useful in gnarly areas where names, patterns, or structure are poor.

AI can also generate summaries of key files and how parts of a system interact, even when the full project is hard to build or reason about due to complex legacy build chains. Perhaps most valuable is encoding learnings: after repeated corrections, Rahn and his team have AI generate rules files (like Cursor rules) that capture those lessons, so the assistant “remembers” them for future work on that codebase. The challenge of documenting and sharing context about legacy codebases across a team can now be increasingly automated through AI.

Rahn is not alone in seeing the value of AI when it comes to legacy code. Thoughtworks reports that using generative AI to understand legacy codebases has moved from experiment to “practical default.” They have successfully used AI to assist with mainframe modernization projects, working with decades-old systems written in languages like COBOL and PL/SQL.

These older languages tend to be less self-descriptive than modern code, making them particularly challenging to understand. By combining LLMs with other techniques, such as retrieval augmented generation (RAG) and knowledge graphs that preserve information about how the code is structured and how different parts connect, they have found that AI helps developers understand complex legacy systems.
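To make the knowledge-graph idea concrete, here is a minimal sketch of how a call graph could be used to pick which related routines to hand to an LLM alongside the one being explained. It is an illustration only, not Thoughtworks’ tooling: the graph contents, the COBOL-style paragraph names, and the `related_units` helper are all hypothetical.

```python
from collections import deque

# Hypothetical call graph: each routine maps to the routines it calls.
call_graph: dict[str, set[str]] = {
    "CALC-INTEREST": {"READ-ACCOUNT", "ROUND-AMOUNT"},
    "READ-ACCOUNT": {"OPEN-FILE"},
    "OPEN-FILE": {"LOG-ERROR"},
}


def related_units(graph: dict[str, set[str]], start: str, max_hops: int = 2) -> list[str]:
    """Return routines reachable from `start` within `max_hops` calls (breadth-first)."""
    hops = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if hops[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbour in sorted(graph.get(node, ())):
            if neighbour not in hops:
                hops[neighbour] = hops[node] + 1
                queue.append(neighbour)
    return [name for name in hops if name != start]


# Routines whose source would be retrieved and added to the prompt.
context = related_units(call_graph, "CALC-INTEREST", max_hops=2)
```

In this sketch the structure of the code, rather than text similarity alone, decides what the model gets to see. `LOG-ERROR` sits three calls away, so it is left out.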

Enabling large-scale refactoring

While LLMs alone may struggle to accurately refactor code at scale, when combined with deterministic structure and human oversight, new possibilities are unlocked.

Airbnb’s engineering team migrated about 3,500 React tests from Enzyme to React Testing Library. They treated the migration like an assembly line: each file moved through simple steps, and if a step failed, they tried again. To help the model make better changes, they included more of the surrounding code in the prompt – sometimes up to 50 related files. This mix of small, repeatable steps, automatic retries, and richer context let them convert most files quickly and safely.
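The assembly-line idea can be sketched in a few lines. This is not Airbnb’s code: `FileJob`, `run_pipeline`, and the stub steps are hypothetical names, and in a real pipeline each step would call an LLM or a tool such as a linter rather than a stub.

```python
from dataclasses import dataclass, field
from typing import Callable

# A step takes the current file text and returns (passed, new_text).
Step = Callable[[str], tuple[bool, str]]


@dataclass
class FileJob:
    path: str
    text: str
    completed: list[str] = field(default_factory=list)  # names of steps that passed


def run_pipeline(job: FileJob, steps: list[tuple[str, Step]], max_attempts: int = 3) -> bool:
    """Move one file through each step in order, retrying a step that fails."""
    for name, step in steps:
        for _ in range(max_attempts):
            passed, new_text = step(job.text)
            if passed:
                job.text = new_text
                job.completed.append(name)
                break
        else:
            return False  # step never passed: leave the file for prompt tuning or manual work
    return True


def make_flaky_step(fail_times: int) -> Step:
    """Stub standing in for an LLM call that fails a fixed number of times first."""
    calls = {"n": 0}

    def step(text: str) -> tuple[bool, str]:
        calls["n"] += 1
        if calls["n"] <= fail_times:
            return False, text
        return True, text.replace("enzyme", "@testing-library/react")

    return step


job = FileJob("Button.test.js", "import { mount } from 'enzyme'")
steps = [("refactor", make_flaky_step(1)), ("lint", lambda text: (True, text))]
finished = run_pipeline(job, steps)
```

Because every file carries its own record of completed steps, failures stay isolated: a stuck file can be retried or set aside without blocking the rest of the batch.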

Their first bulk run achieved 75% success in four hours. They then identified common failure points in files the LLM would get stuck on, picked some files that had these points, tweaked the prompt and then reran against these files to validate that the updated prompt worked. Running this “sample, tune, sweep” loop over four days pushed completion to 97%. The last 3% was done manually by humans. The migration was originally estimated at 1.5 years of engineering time; it was completed in 6 weeks.

Even more ambitious is monday.com’s Morphex, an AI-powered migration system, which tackled breaking apart their massive JavaScript client monolith – a task estimated to take eight years. They completed it in six months. Existing AI tools weren’t sufficient for such scale, so they built a hybrid system combining AI with deterministic orchestration and rigorous validation loops. Morphex gives each file a score based on how tricky and important it is. It starts by extracting the highest‑scoring files first. After each extraction, the system recalculates the scores for the remaining files, because removing dependencies often makes other files easier to extract next.
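A rough sketch of that “score, extract, rescore” loop follows. The scoring formula here is an assumption made for illustration (importance minus a penalty for each dependency still inside the monolith), as are the file names; the article does not describe how Morphex actually computes its scores.

```python
def extraction_order(deps: dict[str, set[str]], importance: dict[str, int]) -> list[str]:
    """Repeatedly extract the highest-scoring file, rescoring after each extraction."""
    remaining = set(deps)
    order: list[str] = []
    while remaining:

        def score(path: str) -> int:
            # Assumed formula: dependencies not yet extracted make a file harder to move.
            blocking = len(deps[path] & remaining)
            return importance[path] - 2 * blocking

        best = max(sorted(remaining), key=score)  # sorted() keeps tie-breaks deterministic
        order.append(best)
        remaining.remove(best)
    return order


# Hypothetical files: each maps to the files it depends on.
deps = {
    "store.js": set(),
    "banner.js": set(),
    "legacy_shim.js": set(),
    "checkout.js": {"store.js", "legacy_shim.js"},
}
importance = {"store.js": 10, "banner.js": 6, "legacy_shim.js": 1, "checkout.js": 9}

order = extraction_order(deps, importance)
```

With scores computed only once, `banner.js` (6) would rank ahead of `checkout.js` (5). Once `store.js` has been extracted, `checkout.js` rises to 7 and moves ahead, which is the effect the rescoring step is meant to capture.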

Each step includes validation – linter passes, tests, code review – and any failures from the previous attempt are passed back to the AI, so the next try can fix those specific issues. The system adds “human to-dos” to flag areas requiring manual review, effectively giving AI the tool to “activate a human” when needed. Once operational, Morphex extracted 1% of monday.com’s codebase in a single day.
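The feedback loop described above might look something like the sketch below. It assumes a generator function that accepts the failures from the previous attempt and validators that return error messages. All names, and the stub model and linter, are hypothetical rather than Morphex’s real interfaces.

```python
from typing import Callable

Validator = Callable[[str], list[str]]       # returns error messages; empty list means pass
Generator = Callable[[str, list[str]], str]  # (source, previous_failures) -> candidate code


def refactor_with_feedback(
    source: str,
    generate: Generator,
    validators: list[Validator],
    max_attempts: int = 3,
) -> dict:
    """Regenerate until every validator passes, feeding failures back each time."""
    failures: list[str] = []
    for attempt in range(1, max_attempts + 1):
        candidate = generate(source, failures)
        failures = [msg for check in validators for msg in check(candidate)]
        if not failures:
            return {"status": "done", "code": candidate, "attempts": attempt, "human_todos": []}
    # Out of attempts: keep the original code and hand the open issues to a person.
    return {"status": "needs_human", "code": source, "attempts": max_attempts, "human_todos": failures}


def fake_model(source: str, failures: list[str]) -> str:
    """Stub for an LLM call: only fixes `var` once the linter has complained about it."""
    if any("var" in msg for msg in failures):
        return source.replace("var ", "const ")
    return source


def lint(code: str) -> list[str]:
    """Stub linter with a single rule."""
    return ["lint: use const instead of var"] if "var " in code else []


result = refactor_with_feedback("var total = 1", fake_model, [lint])
```

The `human_todos` list plays the role of the “activate a human” mechanism: when the loop runs out of attempts, the unresolved failures become explicit review items instead of being silently dropped.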

These successes share critical characteristics: clear existing patterns, systematic engineering approaches, extensive validation, and human oversight. AI excels at local refactors but struggles with architectural decisions. Massive rewrites are now possible at unprecedented scale – but only when teams establish good patterns and validation frameworks first.

Limitations and risks

For all its value, AI is no silver bullet. AI assistance comes with significant risks that temper the optimism.

Hallucinations remain the biggest danger. AI can confidently generate code that looks legitimate but is subtly wrong. As Feathers himself noted, the “hallucination problem” means “you ask a question, you get an answer, and you just really don’t know whether it’s right or not.” Without rigorous testing, these subtle bugs can slip into production.

Context limitations matter. Most LLMs struggle to ingest a large codebase in one go. They operate within limited context windows, meaning the AI only knows the snippets you’ve shown it. If a bug or design concern lies in a different module you didn’t include, the AI won’t consider it. AI excels at local reasoning – one file or function at a time. Add in the fact that legacy systems frequently rely on complex build processes to create a functioning artefact, and the limitations of LLMs are clear. Even as context windows expand, AI will struggle to encapsulate all the human complexities of the business in which the codebase and application are situated.
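A toy example shows why this matters in practice. The sketch below packs files into a fixed token budget and reports what was left out. The four-characters-per-token estimate is a crude assumption, not how any particular model counts tokens, and the file names are made up.

```python
def pack_context(snippets: list[tuple[str, str]], budget_tokens: int) -> tuple[list[str], list[str]]:
    """Greedily include snippets until the token budget is used up."""
    included: list[str] = []
    skipped: list[str] = []
    used = 0
    for name, text in snippets:
        cost = max(1, len(text) // 4)  # assumed rough estimate of the token count
        if used + cost <= budget_tokens:
            included.append(name)
            used += cost
        else:
            skipped.append(name)
    return included, skipped


# Hypothetical files of 400 characters each, roughly 100 tokens apiece.
snippets = [
    ("billing.py", "x" * 400),
    ("tax_rules.py", "y" * 400),
    ("audit_log.py", "z" * 400),
]
included, skipped = pack_context(snippets, budget_tokens=200)
```

Anything that lands in `skipped` is invisible to the model. If the bug lives in `audit_log.py`, no amount of prompting about the other two files will surface it.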

Human oversight is non-negotiable. Perhaps the most repeated advice from practitioners: keep a human in the loop. Real-world testimonials, like that of Bhagya Rana, describe both “magic” and “chaos.” While the AI may accelerate tasks like adding docstrings, it also makes changes that subtly break logic or introduce new bugs.

These tools are excellent “refactoring assistants, not replacements,” and using your own “brain for sanity checks” is still required, Rana says.

Indeed, in some cases, as Martin Fowler, chief scientist at Thoughtworks, has noted, they are slower and less reliable than existing refactoring tools. Rahn agreed with this sentiment, saying: “I review everything, because I just do not trust the AI to do it correctly.”

And perhaps most importantly: AI-generated code still needs tests. By Feathers’ definition, you don’t escape legacy status without them.
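One low-cost way to start is the characterization test, a technique from Feathers’ book: before changing untested code, record what it does today, and let those recorded values guard any refactor, whether a human or an AI performs it. The function below is a made-up stand-in for legacy code, and the expected values were obtained by running it, not taken from a specification.

```python
def legacy_shipping_fee(weight_kg: float, express: bool) -> float:
    """Hypothetical untested function whose original intent is unclear."""
    fee = 5 + weight_kg * 2
    if express:
        fee = fee * 1.5
    if weight_kg > 20:
        fee += 10
    return fee


def test_shipping_fee_current_behaviour() -> None:
    """Pin down today's behaviour, odd or not, so a refactor cannot change it silently."""
    observed = [
        ((1, False), 7),
        ((1, True), 10.5),
        ((25, False), 65),
        ((25, True), 92.5),  # the surcharge is added after the express multiplier
    ]
    for (weight_kg, express), expected in observed:
        assert legacy_shipping_fee(weight_kg, express) == expected


if __name__ == "__main__":
    test_shipping_fee_current_behaviour()
```

The test makes no claim that the behaviour is right, only that it is known. That is enough to move the function out of Feathers’ definition of legacy code.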

Final thoughts

AI takes the problem of legacy code within software development to a new level – as both an accelerant and a balm. It will generate unprecedented amounts of untested code. But it also gives us unprecedented power to understand, document, and refactor the legacy code we already have.

As with any transformative technology, this disrupts the economics of software development. The fundamental trade-offs that define the craft remain: there is still a need to balance long-term code quality against immediate delivery. But the scale and speed at which we can operate have changed dramatically.

AI can usher in a new age of legacy slop or empower us to modernize our software more effectively than ever before. The difference lies in judgment and discipline: requiring tests, maintaining human oversight, and treating AI as the powerful assistant it is.