You have 1 article left to read this month before you need to register a free LeadDev.com account.

It’s possible to have a large codebase that isn’t cumbersome to work with by setting a technology vision, distributing the work effectively, and tracking progress publicly.

Contrary to popular belief, it is possible to have a large, complex codebase that is both enjoyable and productive to work with. Furthermore, you can avoid big rewrites, complex architectures, and slow development.

I didn’t always believe this was possible. In past jobs where I would be stuck working with legacy code, I‘d often ask myself: how did we get here? How did experienced and well-meaning developers end up with this mess?

Over time I came to realize that codebases naturally have a negative inertia, in that they tend to get worse over time. Once the code gets fragile and slow to change we‘re forced into risky fixes, lengthy rewrites, stopping feature work to focus on improvements, and large architecture changes.

The key to thriving with large legacy codebases is to reverse that inertia. If we can reverse the natural decay of our code so that it improves over time, we can take advantage of the benefits of our legacy code, without the huge cost of managing it.

Here’s a simple framework for accomplishing this reliably.

- Set a vision

- Distribute the work

- Track progress publicly

Set a vision

When parts of a codebase have ended up in a bad state, it’s usually because it wasn‘t clear where the codebase was supposed to go. Are we moving our whole frontend to React? Should we be building microservices, or are we building a monolith? REST APIs or GraphQL? Client-rendered UI or server-rendered UI? This lack of technical direction creates uncertainty and leads to teams moving in different directions. When you finally do figure out where you‘re going, you are immediately saddled with ”legacy code“ that needs to be managed or migrated.

At small companies this sort of pivot can be done over a drink, or during a stand-up. However, if you can’t have a stand-up, or drinks, with every developer in the organization, you need to bring together the people who can make the decision for where the codebase needs to go. There will likely be multiple different improvements you want to make to the codebase at any one time, so prioritize these. Once youæve agreed on where to go, you need to communicate this to every team and ensure they will commit time to it.

Done early enough, this may be all that needs to be done. No REST endpoints were written, or GraphQL resolvers if that‘s your choice, and nothing needs to be migrated.

Unfortunately, in the real world, these decisions are rarely made in advance. Sometimes we are focused on more urgent concerns, but often we just don‘t have enough information to make a decision yet. This means we need to migrate our legacy code to match our vision.

Distribute the work

The main reason code improvement projects don‘t get done is that they tend to be large and daunting.

Here’s an example. When we decided to adopt the Flow type checker into a project, one developer took on the task and completed most of the work in a single day. Recently we decided to migrate away from Flow to TypeScript. With the size of our codebase and our rate of shipping new code, this was now a massive project. A single developer would never be able to complete it. Even a full team dedicated to the project would struggle and take a long time. The key to making these projects successful is to distribute the work to the folks with the most context. This is where it helps to have clearly defined code ownership, but that‘s a topic for another article.

At a high level, I‘ve seen two approaches to tackling these kinds of big projects. The first is to have a single person, or group tackle a migration project from start to finish, as we did when we introduced Flow type checking. The second is to distribute the work to the teams most familiar with the code, or data, that needs to be migrated. This is the approach we took when we migrated to TypeScript.

Many organizations are too quick to reach for the first option, likely because it is easier to organize and execute. In large codebases no single group has enough context to safely and efficiently make complex changes to all the code, making any change riskier.

By distributing as much of the prep work as possible, we can leave the final step to a smaller group. For example, when we needed to update the Flow type checker, we had teams update the types in their own code. Once all types were updated, a single developer handled the final cleanup and updating of the Flow package.

Track progress publicly

One of the reasons we avoided distributing work in the past is that it‘s hard to see an easy path to coordinating all of the work. It turns out that, once again, the mechanisms are quite simple, if not always easy.

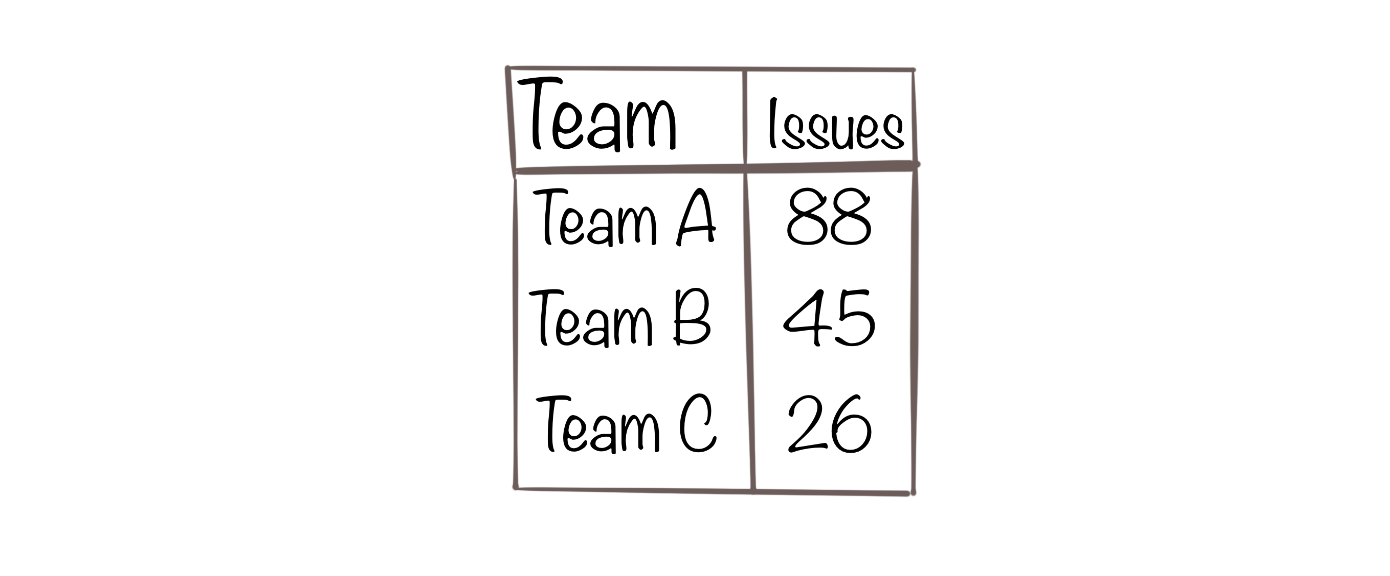

This simple table shows how much work each team has remaining. This could be the number of endpoints without tests, the number of type check failures with a newer version of Flow, or the number of tests that fail on a newer version of Rails. Importantly this table shows how many issues each team is responsible for fixing. Don‘t overthink this. You can maintain this list in a spreadsheet and manually update it. The most important thing is that the teams are looking at it often. In the past, we‘ve used a monthly all-hands meeting to highlight our collective progress.

Automating progress tracking

While manually updating this table works, it can quickly become time-consuming for more complex projects. We started writing simple CLI scripts to generate the progress numbers. This not only simplifies the process of keeping the numbers up to date, it also allows teams to run the script themselves and see not only their real-time progress, but exactly which files and lines of code they need to fix.

Reversing inertia

Once we found a way to automate tracking, we searched for better ways to surface these issues. We‘ve used linter rules, and CI scripts to surface issues in the past. This means teams will see these issues as they modify or update old code and will fix them as they go. Over time these issues will slowly be resolved, leading to a general improvement of your codebase.

Being intentional about where you want your codebase to be years from now and creating simple feedback loops for your team can put your legacy codebase on a path to success. It will improve over time, without large and costly interventions. Teams can continue to be productive while working on it, and you’ll be able to take advantage of new technologies to stay competitive and avoid unexpected productivity blockers. Not least, of all your codebase will continue to be enjoyable to work with.