You have 1 article left to read this month before you need to register a free LeadDev.com account.

Just how bad is the mean time to repair (MTTR) problem? And how can engineers circumvent common issues to reach their MTTR goals?

Companies everywhere are counting on cloud-native architectures to enable engineers to deliver exceptional customer services more efficiently so they can both grow top-line revenue and protect the bottom line. Yet when incidents occur, those same technical teams are finding it nearly impossible to remediate issues quickly.

The recent 2023 Cloud Native Observability Report survey of 500 engineers and engineering leaders at US-based companies revealed just how serious the mean time to repair (MTTR) problem has gotten.

Missing MTTRs is shockingly common

In fact, 99% of companies are missing MTTRs. Disappointingly, only 7 out of 500 respondents believed that they were meeting or exceeding their MTTR goals.

Here are the top three reasons why we are seeing organizations aren’t meeting their MTTR goals and what you can do to remediate customer issues faster.

1. MTTR is often poorly defined

The concept of MTTR dates back to the 1960s, when it was used by the Defense Technical Information Center to assess the reliability and availability of military equipment. As system software and application performance have risen in importance, this term has been adopted by engineering teams. Unfortunately, it’s morphed over time and no longer has a consistent definition.

MTTR can sometimes be defined as “mean time to restore”, “mean time to respond”, or “mean time to remediate”.

However, MTTR is properly defined as “mean time to repair”. It is the measurement of the time it takes between an issue occurring and being fixed.

The problem is different companies start and stop the MTTR clock at different points. Some measure the time it takes to get systems back to some operational semblance, or the time it takes to completely fix the underlying problem. And your company can only benchmark repair time against itself.

2. Cloud-native complexity is more challenging than anticipated

Enterprises have been rapidly adopting cloud-native architectures in a bid to increase efficiency and revenue. Of the survey respondents, 46% are running microservices on containers, and 60% of respondents expect to be running this way in the next 12 months.

While yielding positive benefits, the continued shift to cloud-native environments has also introduced exponentially greater complexity, higher volumes of observability data, and increased pressure on engineering teams supporting customer-facing services.

There is no doubt that cloud-native complexity makes finding and fixing issues harder. In fact, 87% of engineers surveyed say cloud native architectures have increased the complexity of discovering and troubleshooting incidents. The inability to successfully address cloud-native complexity can have significant negative MTTR, as well as broad customer and revenue impacts.

Cardinality is a mathematics term that describes the number of unique combinations you can achieve with a single data set. Here’s a very simple explanation:

- If two people each have 20 ice cream cones, but one person has five flavors and three sizes, the unique number of combinations they could have is 15.

- If the other person has only five flavors all one size, the number of unique combinations is five.

- Each person has the same volume of ice cream cones, but one has a lot more complexity.

In the observability world, instead of flavors and size combinations, it’s time streams. For example, if one engineer decides to add universally unique identifiers (UUID) to their metrics, suddenly your cardinality explodes, catastrophically slowing down systems and driving up costs.

3. Observability tools are failing engineers at critical times

The 2023 Cloud Native Observability Report also reveals the observability tools that companies put in place before cloud-native growth that should be helping their engineers achieve their MTTR goals aren’t working as promised.

Individual contributors surveyed identified a host of shortcomings with their current observability solutions, ranging from too much manual time and labor, to after-hours pages for non-urgent issues, to insufficient context to understand and triage an issue.

Engineers and developers agree that observability tools take a lot of time and manual labor to instrument, often page for alerts that aren’t urgent or don’t give enough context, and are too slow to load dashboards and queries.

Alerts also aren’t working. Over half of the respondents said that half the incident alerts they receive from current observability solutions aren’t helpful or usable. Alerts that show context linking them to services or customers affected give engineers more actionable dashboards, helping companies to significantly shrink time to remediation.

Implement fixes faster

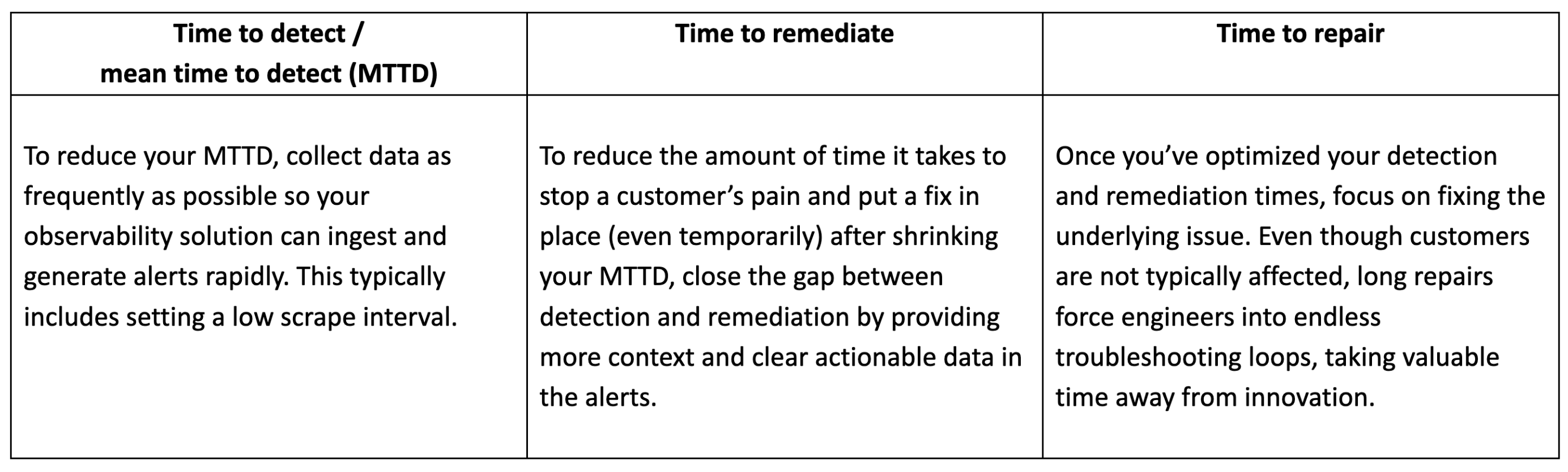

To get better at achieving your MTTR goal, your organization needs to find ways to shorten the three key steps of the MTTR equation:

For example, Robinhood, which operates in the highly-regulated fintech space, had a four-minute gap between an incident happening and an alert firing. By upgrading its observability platform and shrinking data collection intervals, Robinhood reduced MTTD to 30 seconds or less.

If you have existing observability capabilities – alerting, exploration, triage, root-cause analysis – failing your engineers during critical incidents, it’s highly unlikely your teams will meet their MTTR targets.

Access more insights from the 2023 Cloud Native Observability Report and learn how you can boost your MTTR success rate at Chronosphere.io.