Current hiring processes are rife with pedigree bias: they assess candidates not on what they can do, but on where they have previously studied or worked.

The global hiring market is undergoing a fundamental shift. As technology advances at a rapid pace and demand for digital-first business models grows, companies everywhere are struggling to hire highly qualified technical talent at scale.

Despite investments in core systems and advancements in technology, many organizations continue to rely on antiquated philosophies and processes to fill some of their most mission-critical roles. These philosophies and methods are rife with pedigree bias, resulting in an inequitable hiring process that overlooks, under-estimates, and under-represents top technical talent.

A refresher on pedigree bias

When hiring, people often make quick judgment calls about whether someone is right for a company based on the limited information found in a resume or CV. In theory, both documents offer the information needed; in practice, that information is hard to digest.

Ultimately, hiring managers and recruiters often end up relying on resumes to check a candidate’s educational and professional background. If the candidate studied at a “good school” or worked at a company with a “good reputation,” that’s often considered evidence they will be successful in a new role.

This outdated practice is insidious because it isn’t entirely wrong. Candidates who studied at certain schools and worked for certain employers are more likely to succeed than a random selection of the population at large. But, at best, this approach fails to highlight what’s actually important: whether the candidate can excel in the advertised role.

Additionally, the school that a candidate attended and where they previously worked are heavily influenced by socioeconomic factors. By amplifying the selection biases present at these institutions, we create a limit on the fairness of our own process. We can never be fairer than the input processes on which we rely.

How resume screenings reinforce pedigree bias

Filtering candidates based on resumes is a difficult problem because resumes are unstructured and uncalibrated. The content within them can be challenging to compare. For instance, a developer who just graduated from college might state that they are an expert in Java, but someone with years of experience might describe themselves as moderately skilled – simply because the experienced developer has more awareness of the size and scope of a problem.

Companies and schools that have proven, prestigious reputations are seen as “safe bets” when it comes to quelling the chaos associated with hiring. It’s reasonable to base decisions on our past experiences with other professionals and graduates from these organizations. It gives us a sense of their standards for excellence; however, this creates pedigree bias.

This bias is particularly hard to fight because of how early it occurs in the hiring process. We can put in a lot of work to make our interview process fair – only to deeply diminish its effectiveness by pre-screening out many candidates for biased reasons.

To truly solve this problem, we need to assess candidates differently early in the hiring process using a method that doesn’t rely on whether a candidate’s background happens to be similar to those we’ve already worked with or met.

The journey toward the skills economy

In the current economic climate, businesses are optimizing their hiring processes in order to attract the best candidates. They are keenly interested in hiring professionals who can demonstrate their skills and deliver quickly. Alex Kaplan, an IBM executive who leads the company’s digital credentials team and consulting talent transformation group, believes the world of work is shifting to an economy “where skills themselves are the currency of the future.”

Essentially, Kaplan believes that companies will assign work and hire for roles based on how an individual’s skills fulfill the needs of a specific opportunity and successfully advance the company’s future. This hiring trend creates a distinct and urgent need to validate a candidate’s skills with efficiency and ease so companies can hire at scale.

The solution that accelerates the evolution

As an interviewing company, we partner with Fortune 100 and 500 organizations to fairly source, screen, interview, and evaluate technical talent. Having conducted over 300,000 software engineering interviews for our customers, we understand that hiring technical talent at scale is both an art and a science. It requires work to make the hiring process fair, predictable, and enjoyable for candidates.

We believe that keeping the applicant pool as large as possible in the early stages of the hiring process actually leads to finding the most highly qualified candidates. Assessments that give a large group of applicants a chance to demonstrate their skills and expertise — instead of relying on a resume to spotlight where they’ve worked and studied — can become a powerful and invaluable tool.

It’s possible to construct a more equitable experience that lets candidates showcase their capabilities without pedigree bias by combining Item Response Theory (IRT) and Computer Adaptive Testing (CAT).

The combination of IRT and CAT creates a data-driven approach that reveals a person’s aptitude in a certain skill set, rather than relying solely on CVs and resumes. Because past education and work experience aren’t considered and the candidate is assessed only on their skills, this approach eliminates the paths by which pedigree bias enters the process.

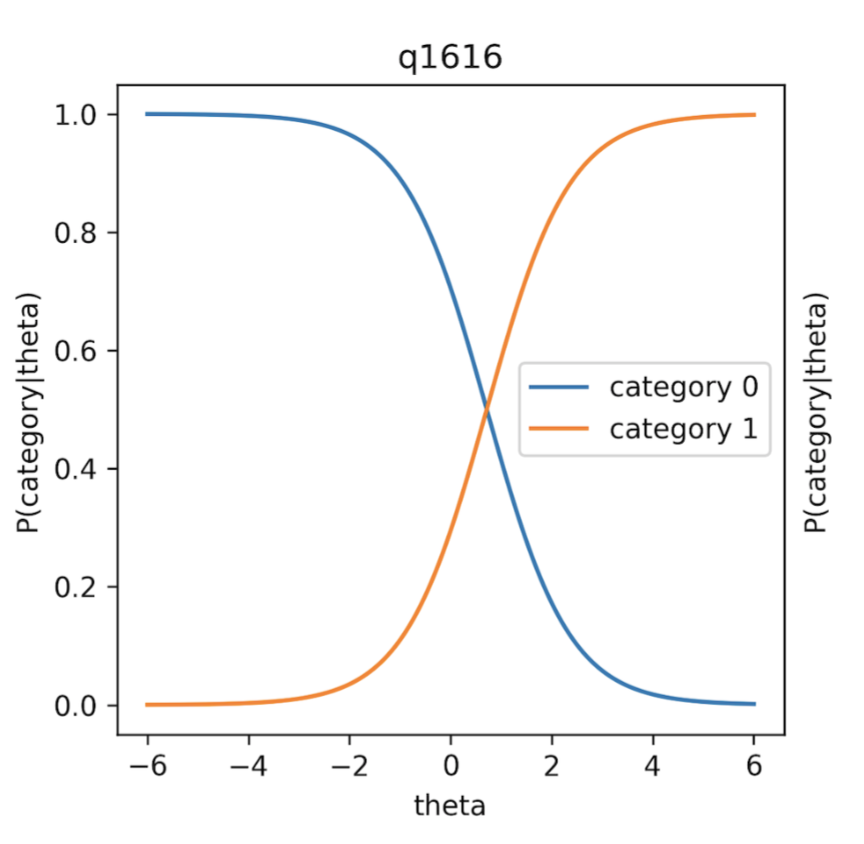

Using IRT to unveil latent traits

IRT is a mathematical model most commonly used for standardized tests like the Graduate Management Admission Test (GMAT) and the Graduate Record Examination (GRE). Here, as in those tests, it is used to understand “latent traits” through demonstrated performance. A latent trait is a characteristic or quality that cannot be directly observed. For example, fluency in a language is a latent trait: it cannot be measured directly, but it quickly becomes apparent over the course of a conversation.

In the tech industry, IRT can be used to measure coding skills. Each question in an IRT-based assessment is calibrated for:

- Difficulty – What percentage of people will generally answer a specific question correctly at a certain ability level?

- Differentiation – How well does the question distinguish candidates of contrasting abilities? (The IRT literature usually calls this property discrimination.)

A question’s calibration can be visualized as a curve, or trace line, that plots the chance of a correct answer against ability, like this:

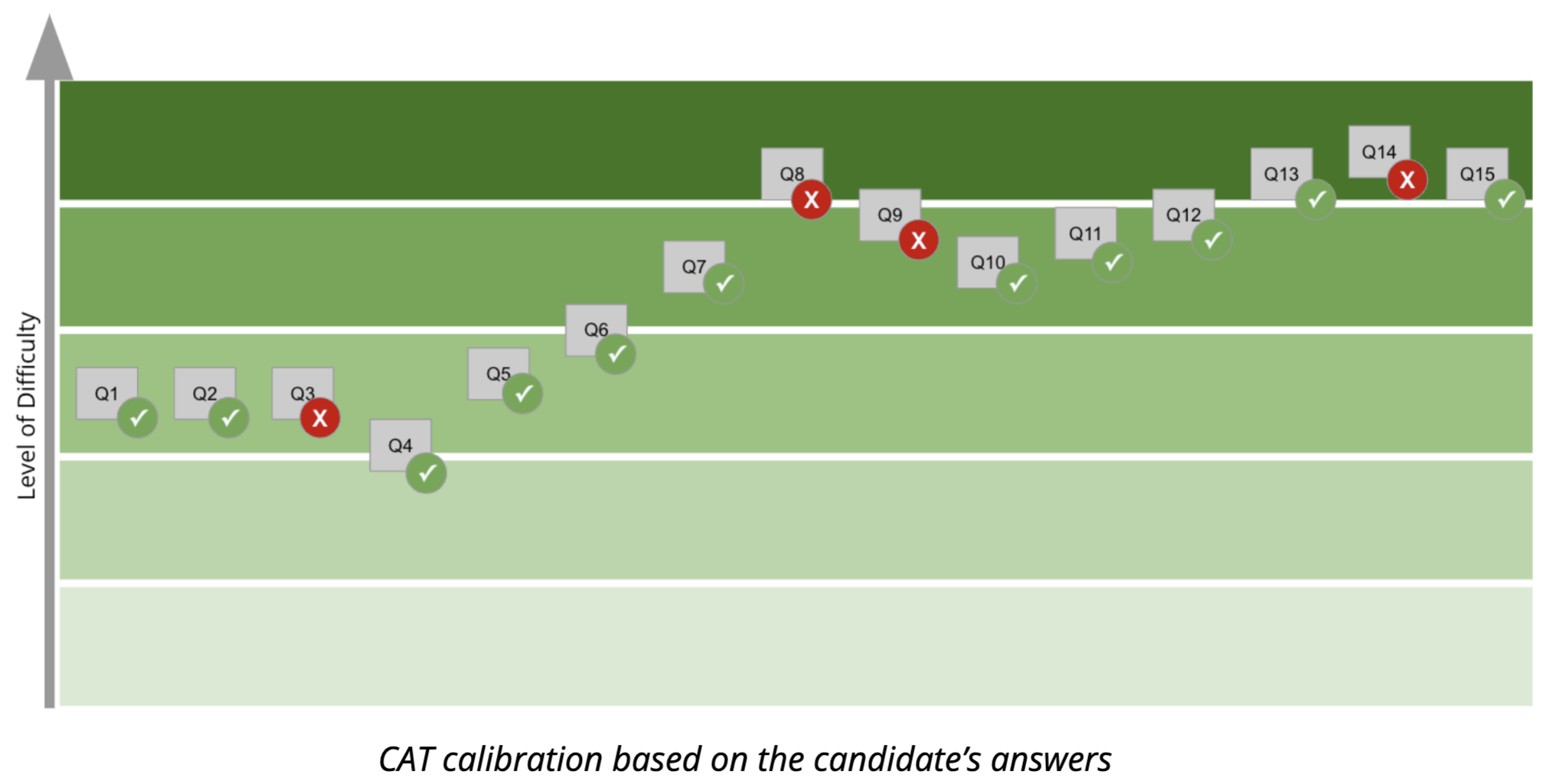
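
To make the two calibrations concrete, here is a minimal sketch of one common IRT formulation, the two-parameter logistic (2PL) model. This article does not commit to a particular IRT model, so treat the choice of 2PL as an assumption; the function name `p_correct` and the parameter values are made up for illustration.

```python
import math


def p_correct(theta: float, difficulty: float, discrimination: float) -> float:
    """Chance of a correct answer under an assumed 2PL model.

    theta          -- the candidate's latent ability
    difficulty     -- ability level at which the chance of success is 50%
    discrimination -- how steeply the curve rises around that point
    """
    return 1.0 / (1.0 + math.exp(-discrimination * (theta - difficulty)))


# Two hypothetical questions with the same difficulty: one separates
# candidates weakly (flat curve), the other sharply (steep curve).
for theta in (-2, -1, 0, 1, 2):
    flat = p_correct(theta, difficulty=0.0, discrimination=0.5)
    steep = p_correct(theta, difficulty=0.0, discrimination=2.0)
    print(f"ability {theta:+d}: flat={flat:.2f}  steep={steep:.2f}")
```

Both questions give a 50% chance of success to a candidate whose ability equals the difficulty, but the steep one tells far more about whether a candidate sits just above or just below that level.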

Using CAT to enhance assessment precision

CAT takes candidate responses and the calibration of each question as inputs and uses them to estimate the candidate’s ability in the skills being evaluated.

This adaptive approach recomputes the estimate after each individual response and uses the updated estimate to decide what kind of question to ask next, so that each new question fine-tunes the estimate. For example, if the candidate shows higher proficiency, harder questions are asked to keep refining the estimate (and vice versa).
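
The loop described above can be sketched in a few lines. This is an illustration under stated assumptions, not a description of any production system: it assumes a 2PL model, re-estimates ability as a posterior mean over a grid with a standard normal prior, and picks the next question by maximum Fisher information. The item bank, the simulated candidate, and every function name are hypothetical.

```python
import math
import random


def p_correct(theta, a, b):
    """Assumed 2PL model: a = discrimination, b = difficulty."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def item_information(theta, a, b):
    """Fisher information a 2PL question carries at ability theta."""
    p = p_correct(theta, a, b)
    return a * a * p * (1.0 - p)


GRID = [g / 10.0 for g in range(-40, 41)]  # candidate abilities from -4 to 4


def estimate_ability(responses):
    """Posterior mean of ability given [((a, b), correct), ...]."""
    weights = []
    for theta in GRID:
        w = math.exp(-0.5 * theta * theta)  # unnormalised N(0, 1) prior
        for (a, b), correct in responses:
            p = p_correct(theta, a, b)
            w *= p if correct else 1.0 - p
        weights.append(w)
    return sum(t * w for t, w in zip(GRID, weights)) / sum(weights)


def next_item(theta_hat, remaining):
    """Pick the unused question that is most informative at the estimate."""
    return max(remaining, key=lambda item: item_information(theta_hat, *item))


def run_adaptive_test(true_theta, bank, n_questions, rng):
    """Simulate one candidate; return the final estimate and the responses."""
    remaining, responses, theta_hat = list(bank), [], 0.0
    for _ in range(n_questions):
        item = next_item(theta_hat, remaining)
        remaining.remove(item)
        correct = rng.random() < p_correct(true_theta, *item)  # simulated answer
        responses.append((item, correct))
        theta_hat = estimate_ability(responses)  # recompute after every answer
    return theta_hat, responses


# Hypothetical bank of 61 questions with difficulties spread from -3 to 3.
bank = [(1.0 + 0.1 * (i % 5), -3.0 + 0.1 * i) for i in range(61)]
rng = random.Random(0)
theta_hat, responses = run_adaptive_test(1.0, bank, 15, rng)
print(f"estimate after {len(responses)} questions: {theta_hat:.2f}")
```

Because the most informative 2PL question is one whose difficulty sits near the current estimate, a run of correct answers pushes the estimate up and pulls in harder questions, which matches the behaviour described above.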

Putting a new approach into practice

We have applied this approach to Karat Qualify, our adaptive assessment technology that screens large pools of candidates at the top of a hiring funnel. Karat Qualify is a 15-question exercise that is self-administered and available to candidates on demand. It screens an applicant’s abilities for specific software development languages or domains, including front-end development, Python, JavaScript, machine learning, and more.

We have run simulations to check that this assessment system evaluates candidates accurately. We generated responses for simulated test candidates, had Qualify estimate their ability levels, and checked whether the technology accurately adapted to the candidates’ abilities. These results helped us review and refine Qualify’s accuracy.

We also performed higher-level validation, comparing the scores of real candidates who both answered the Qualify questions and attended an interview. Candidate scores on the Qualify questions correlated well with their interview results: candidates who scored well on Qualify were more likely to also succeed in a technical skills interview.

For test-retest reliability (a measure of reliability obtained by administering the same test more than once over a period of time), we simulate a set of candidates and have each candidate take a Qualify quiz twice. We then compare the two scores for each candidate in a correlation plot. A reliable quiz will show a strong correlation between the two attempts because candidates should get similar scores, assuming their ability hasn’t changed.
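
A test-retest check of this kind can be sketched as follows. To keep the example short, it makes simplifying assumptions that differ from an adaptive assessment: every simulated candidate takes the same fixed 15-question quiz, scores are plain counts of correct answers, and responses follow a 2PL model. The quiz, the candidate pool, and the function names are all hypothetical.

```python
import math
import random


def p_correct(theta, a, b):
    """Assumed 2PL model: a = discrimination, b = difficulty."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def take_quiz(theta, items, rng):
    """Number of questions a simulated candidate answers correctly."""
    return sum(rng.random() < p_correct(theta, a, b) for a, b in items)


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


rng = random.Random(42)
# Hypothetical fixed quiz: 15 questions with difficulties from -2 to 2.
items = [(1.5, -2.0 + 4.0 * i / 14) for i in range(15)]
# 500 simulated candidates whose ability does not change between sittings.
abilities = [rng.gauss(0.0, 1.0) for _ in range(500)]
first = [take_quiz(theta, items, rng) for theta in abilities]
second = [take_quiz(theta, items, rng) for theta in abilities]
print(f"test-retest correlation: {pearson(first, second):.2f}")
```

The two sittings differ only through the randomness in each answer, so the correlation reflects how much of a score is driven by ability rather than chance.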

For criterion validity, we simulate tasks for candidates of various abilities and check that their scores on our assessments correspond to those abilities. If we are measuring the right things, test candidates at varying levels of ability will receive correspondingly varying scores on a Karat Qualify assessment.

Final thoughts

Ultimately, we aim to unlock opportunities for everyone – candidates, customers, and the industry. Offering products and services that focus on talent and skill – rather than on the pedigree bias associated with resume screenings – furthers that aim. This commitment, combined with products and services that showcase the skills of professionals who are often overlooked, under-estimated, and under-represented, can have a positive impact for years to come.